The cellular industry today is patiently looking forward to the deployment of LTE-Advanced, also known as Release 10 of the 3GPP’s Long-Term Evolution (LTE) technology. While the “4G” label has been used to describe many of the services provided by cellular networks today, insiders know that true 4G LTE, as originally defined by the International Telecommunication Union (ITU), begins with LTE-Advanced.

The goal of 4G technology is to provide higher data throughput rates and better coverage. To meet the ITU’s original 4G requirements, the technology must deliver a peak (low-mobility) downlink throughput of 1 Gbps and a peak uplink throughput of 500 Mbps. LTE-Advanced also aims to deliver a greater peak spectral efficiency of 30 b/s/Hz in the downlink and 15 b/s/Hz in the uplink, approximately double the efficiency of today’s commercially deployed “4G” technologies.

Toward that end, the industry has focused on three critical areas of improvement in LTE-Advanced: relay nodes, improved radio antenna techniques and carrier aggregation. The latter two directly affect the design and implementation of mobile devices (UE) and are further discussed in this article.

MIMO and Beamforming

Neither Multiple-Input-Multiple-Output (MIMO) nor beamforming antenna techniques are new with LTE-Advanced. Both techniques have been used in other radio communications technologies, besides cellular communications, for some years. In one of the industry’s more interesting challenges, the two techniques are being combined into “MIMO beamforming” and will be a highly significant factor in the TD-LTE rollouts being readied in major markets in Asia.

Technically, MIMO beamforming transmission modes have been defined since the 3GPP’s Release 8. However, the stated performance goals of Release 10 (specifically the data throughput rates in both downlink and uplink) were created based on the assumption that MIMO would be fully implemented. Release 10 introduces a new downlink transmission mode (Transmission Mode 9) that implements beamforming in an 8×8 MIMO scheme. It also officially introduces the use of MIMO in the uplink.

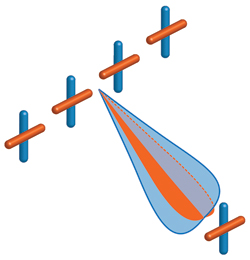

Figure 1 MIMO beamforming – two cross polarized beams.

In MIMO beamforming, multiple antennas are used to create a polarized “beam” of focused energy (shown as orange in Figure 1). A second set of antennas (shown as blue in the diagram) creates a second beam that is cross-polarized in relation to the first. All of this happens in the same frequency band at the same time. The result is that the system can deliver multiple data streams (due to MIMO’s ability to differentiate between the polarized beams) and can target those data streams in specific directions (due to the beams formed).

Both of these techniques are extremely complex, but at a high level, MIMO uses multiple antennas at both the transmitter and receiver to exploit space as a domain in which to increase data rates, or share time/frequency resources between users. At an equally high level, beamforming uses multiple antennas at the transmitter or receiver (or both) to increase coverage by focusing energy in specific spatial directions.
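The beamforming half of that description can be illustrated with a minimal sketch. It assumes an idealized uniform linear array with half-wavelength spacing and phase-only weights; the function names, the array geometry and the angles are illustrative choices, not anything taken from the 3GPP specifications.

```python
import cmath
import math

def steering_weights(num_antennas, steer_deg, spacing_wavelengths=0.5):
    """Phase-only weights that point a uniform linear array toward steer_deg."""
    phase_step = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(steer_deg))
    # Conjugate of the array response at steer_deg, normalized to unit total power.
    return [cmath.exp(-1j * n * phase_step) / math.sqrt(num_antennas)
            for n in range(num_antennas)]

def array_gain_db(weights, look_deg, spacing_wavelengths=0.5):
    """Power gain in dB, relative to one antenna, seen in direction look_deg."""
    phase_step = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(look_deg))
    response = sum(w * cmath.exp(1j * n * phase_step) for n, w in enumerate(weights))
    # Floor the power so a perfect null does not produce log10(0).
    return 10 * math.log10(max(abs(response) ** 2, 1e-12))

# Hypothetical 8-element array steered 20 degrees off boresight.
weights = steering_weights(num_antennas=8, steer_deg=20.0)
on_beam = array_gain_db(weights, 20.0)    # direction the beam is pointed at
off_beam = array_gain_db(weights, -40.0)  # an arbitrary direction away from the beam
```

With the same total transmit power, the eight-element array concentrates roughly 9 dB more power in the steered direction than a single antenna would, while other directions receive far less. That is the coverage gain the paragraph above refers to.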

While it is important to note that TD-LTE technology is not specific to LTE-Advanced (it has been a part of the LTE family since Release 8), the combination of MIMO and beamforming is extremely important in TD-LTE. The timing of their availability coincides with large-scale TD-LTE deployments being planned in China, India, Japan and elsewhere. More significantly, MIMO and beamforming both require that a transmitter have some knowledge about the radio channel on which it is transmitting; in FDD-based LTE systems, this knowledge is acquired through feedback systems.

TD-LTE has a distinct advantage when it comes to deploying MIMO and beamforming. Since the uplink and downlink frequencies are the same, the eNodeB (base station) transceiver can analyze a received signal and use the collected information to form a reasonable estimate of the transmission channel. This eliminates the need for a feedback loop from the mobile device, which both expedites and eases the implementation of MIMO, beamforming and MIMO beamforming.
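The reciprocity argument can be sketched in a few lines. This is a deliberately simplified illustration: it assumes a single narrowband channel coefficient per eNodeB antenna, a noise-free uplink pilot and perfectly calibrated transmit and receive chains. The channel values and function names are hypothetical.

```python
import math

def estimate_channel(received_pilots, pilot_symbol):
    """Least-squares estimate per eNodeB antenna: h = y / p."""
    return [y / pilot_symbol for y in received_pilots]

def matched_weights(channel_estimate):
    """Conjugate (maximum-ratio) weights normalized to unit total power."""
    norm = math.sqrt(sum(abs(h) ** 2 for h in channel_estimate))
    return [h.conjugate() / norm for h in channel_estimate]

# Hypothetical narrowband channel seen by a 4-antenna eNodeB (illustrative values).
true_channel = [0.8 + 0.3j, -0.2 + 0.9j, 0.5 - 0.5j, -0.7 - 0.1j]
pilot = complex(1, 1) / math.sqrt(2)  # known uplink reference symbol

# Uplink: the UE sends the pilot; noise is omitted to keep the sketch short.
uplink_rx = [h * pilot for h in true_channel]
h_est = estimate_channel(uplink_rx, pilot)

# Downlink: on the same frequency, the eNodeB reuses the uplink estimate
# to form its transmit weights, with no feedback from the UE.
weights = matched_weights(h_est)
beamformed_amplitude = abs(sum(h * w for h, w in zip(true_channel, weights)))
single_antenna_amplitude = abs(true_channel[0])
```

In an FDD system the downlink channel would differ from `h_est` because it sits on another frequency, which is why the UE has to report channel information back to the base station instead.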

While the goal is well worth the effort, the added value comes at the cost of complexity in testing. Today’s specifications include an 8×8 MIMO beamforming mode, meaning that in the not-too-distant future, eight antennas will be transmitting and eight will be receiving, all at the same frequency and at the same time. The UE will have to process all received data in order to differentiate between these data streams. In addition, certain radio characteristics (such as signal phase) take on new importance in MIMO beamforming, adding complexity and demanding a new level of accuracy in the emulated channels used in lab-based testing. A new generation of channel emulation solutions, such as the Spirent VR5, has been developed to address this evolving need.

Carrier Aggregation

Based on the throughput and spectral efficiency requirements of LTE-Advanced, a quick calculation shows that both the uplink and downlink require more than 20 MHz of bandwidth to achieve these targets. Because the spectrum allocated to cellular technologies is fragmented, finding sufficient contiguous spectrum is not an option in most cases. Carrier aggregation, a feature introduced in Release 10, addresses this spectrum fragmentation, and for this reason it is the LTE-Advanced feature most likely to be deployed on a large scale in the near future.
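The quick calculation can be written out as follows. It simply divides each peak throughput target by the corresponding peak spectral efficiency target quoted earlier in this article; the function name is illustrative, and the 20 MHz figure used for comparison is the widest single carrier defined in Release 8.

```python
def required_bandwidth_mhz(peak_rate_mbps, spectral_efficiency_bps_per_hz):
    """Minimum bandwidth needed to reach a peak rate at a given efficiency.

    Mbps divided by b/s/Hz gives MHz directly.
    """
    return peak_rate_mbps / spectral_efficiency_bps_per_hz

RELEASE8_MAX_CARRIER_MHZ = 20  # widest single LTE carrier in Release 8

downlink_mhz = required_bandwidth_mhz(1000, 30)  # 1 Gbps at 30 b/s/Hz
uplink_mhz = required_bandwidth_mhz(500, 15)     # 500 Mbps at 15 b/s/Hz
```

Both directions come out at roughly 33 MHz, well above what a single 20 MHz carrier can offer even at peak spectral efficiency.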

Carrier aggregation enables high data rates by combining multiple Release 8 carriers to support transmission bandwidths of up to 100 MHz. This approach provides several advantages:

- Backward compatibility with Release 8 and Release 9 channels

- Flexible dynamic scheduling to mitigate varying channel conditions

- Increased throughput rates
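As a rough sketch of how component carriers add up, the snippet below sums the bandwidth and resource blocks of a set of Release 8 carriers. The table of channel bandwidths and the limit of five component carriers are not stated in this article; they reflect the commonly cited LTE values and should be checked against the 3GPP specifications before being relied on. The function name is hypothetical.

```python
# Release 8 channel bandwidth (MHz) -> resource blocks (assumed standard values).
RELEASE8_RESOURCE_BLOCKS = {1.4: 6, 3: 15, 5: 25, 10: 50, 15: 75, 20: 100}
MAX_COMPONENT_CARRIERS = 5  # assumption: five 20 MHz carriers give the 100 MHz ceiling

def aggregate(component_bandwidths_mhz):
    """Return (total bandwidth in MHz, total resource blocks) for a carrier set."""
    if not 1 <= len(component_bandwidths_mhz) <= MAX_COMPONENT_CARRIERS:
        raise ValueError("expected between 1 and 5 component carriers")
    for bandwidth in component_bandwidths_mhz:
        if bandwidth not in RELEASE8_RESOURCE_BLOCKS:
            raise ValueError(f"{bandwidth} MHz is not a Release 8 channel bandwidth")
    total_mhz = sum(component_bandwidths_mhz)
    total_rb = sum(RELEASE8_RESOURCE_BLOCKS[bw] for bw in component_bandwidths_mhz)
    return total_mhz, total_rb

widest = aggregate([20, 20, 20, 20, 20])  # the 100 MHz upper limit
mixed = aggregate([10, 20])               # an unequal pair of carriers
```

Because every component carrier is an ordinary Release 8 carrier, a Release 8 or Release 9 device can still camp on any one of them, which is where the backward compatibility in the list above comes from.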

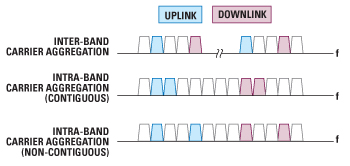

Figure 2 Three types of carrier aggregation.

The spectral “building blocks” of an aggregated carrier are called “component carriers,” each of which is the equivalent of a Release 8 carrier delivered by a separate serving cell. A UE using carrier aggregation will establish a link with one Primary Cell (PCell) and one or more Secondary Cells (SCells). Three types of carrier aggregation are defined: inter-band, contiguous intra-band and non-contiguous intra-band. Figure 2 offers a graphical explanation of these terms. Because of global spectrum fragmentation, most deployments will implement inter-band carrier aggregation.
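The three aggregation types can be told apart with a simple rule, sketched below for a pair of component carriers. The sketch treats two carriers as contiguous when their band edges touch; the actual 3GPP definition is based on nominal channel spacing, so this is a simplification. The class, the function and the band numbers and frequencies in the usage lines are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ComponentCarrier:
    band: int        # operating band number (illustrative label)
    low_mhz: float   # lower edge of the carrier
    high_mhz: float  # upper edge of the carrier

def classify_aggregation(cc_a, cc_b, max_gap_mhz=0.0):
    """Classify a pair of component carriers into one of the three types."""
    if cc_a.band != cc_b.band:
        return "inter-band"
    first, second = sorted((cc_a, cc_b), key=lambda cc: cc.low_mhz)
    gap = second.low_mhz - first.high_mhz
    if gap <= max_gap_mhz:
        return "contiguous intra-band"
    return "non-contiguous intra-band"

# Hypothetical carriers: two adjacent in one band, one further up, one in another band.
pcell = ComponentCarrier(band=1, low_mhz=2110.0, high_mhz=2130.0)
adjacent = ComponentCarrier(band=1, low_mhz=2130.0, high_mhz=2150.0)
separated = ComponentCarrier(band=1, low_mhz=2155.0, high_mhz=2165.0)
other_band = ComponentCarrier(band=5, low_mhz=869.0, high_mhz=879.0)

kind_adjacent = classify_aggregation(pcell, adjacent)
kind_separated = classify_aggregation(pcell, separated)
kind_other = classify_aggregation(pcell, other_band)
```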

Carrier aggregation relies on new elements of the Radio Resource Control (RRC), Medium Access Control (MAC) and Physical (PHY) layers:

- RRC layer modifications deal with cell connection and handover processes and are outlined in the 3GPP RRC protocol specification (TS 36.331) and UE radio access specification (TS 36.306)

- MAC sub-layer changes accommodate the use of multiple cells and are described in detail in 3GPP TS 36.321

- PHY layer changes allow such options as cross-carrier scheduling, which enacts all scheduling on a single carrier (thereby reserving SCells for user data)

Other protocol layers such as the Packet Data Convergence Protocol (PDCP) and Radio Link Control (RLC) are not impacted by carrier aggregation. In fact, from the perspective of the user plane the aggregated carrier is a single bearer just like any other.

Other Considerations

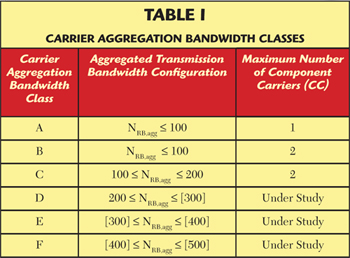

Table 1 UEs that support carrier aggregation are classified by aggregate bandwidth, which is a function of frequency, with each resource block occupying up to 200 kHz.

Release 10 includes provisions for six classes, but has only fully defined classes A, B and C (as of the time this article was written). Table 1 defines each class by the number of component carriers (CC) supported and by the aggregated resource blocks (NRB,agg). Note that the aggregate bandwidth (BWagg) is a function of frequency, with each resource block occupying up to 200 kHz.

As of Release 10, a UE must be able to report which bands it supports and its carrier aggregation capability for each band. Since some of the operators most interested in deploying carrier aggregation do not own spectrum in the bands defined in Release 10, they will likely deploy the technology in the bands they have available. A more widely applicable set of configuration scenarios is likely to be defined in Release 11.

Meaningful testing of carrier aggregation techniques needs much more than just a radio-channel emulation solution. It requires the ability to readily create the protocol interactions that can exercise the UE’s ability to manage all the possible combinations of carrier-aggregation scenarios.

Conclusion

LTE-Advanced introduces new device and network capabilities that will have a profound influence on the success or failure of next-generation cellular technology. Two of the more critical features are carrier aggregation and enhanced MIMO beamforming, technologies that add significant complexity to device development and bring us much closer to “True 4G.” Because these features are both important and complex, UE testing takes on a new level of consequence. Realization of LTE-Advanced will require not only updates to existing testing tools, but also the creation of new and innovative tools designed specifically to help drive the success of this next generation of global wireless technology.

Michael Keeley is a director of product management at Spirent Communications’ wireless test equipment division. He has led various teams involved in wireless network emulation and automated systems used for testing mobile devices. Prior to joining Spirent in 2000, he worked for Lucent Technologies. He earned his BSEE and MEng from Cornell University and an MBA from New York University’s Stern School of Business.