Autonomous vehicles (AVs) are navigating freeways and highways in many regions of the world. Most mass-produced consumer vehicles have some level of autonomy, be it SAE International level 2 or 3.[1] The AV industry is moving fast, and its complex socio-technical innovations come with profound economic and social impacts. Reports suggest self-driving vehicles could reduce the number of traffic deaths by as much as 90 percent.[2] In addition to saving lives, AVs could save billions in collision costs and give people with disabilities a chance to be mobile.

However, setbacks have damaged the public’s opinion of and trust in fully autonomous vehicles. In 2018, the first recorded fatal accident involving an Uber self-driving vehicle occurred because of human error, according to prosecutors.[3] The road to full autonomy will be complex. The automotive industry must overcome multifaceted challenges to realize the vision of autonomous mobility that is safe and robust. Although many of these challenges are difficult to solve, technology limitations are one area where the automotive community and equipment manufacturers can make progress.

Continuous investments in sensor technologies such as radar, lidar and cameras will improve environmental scanning. Although each sensor type has advantages and disadvantages, manufacturers can combine them to ensure that object detection has built-in redundancy. Improving the testing process and testing earlier during development with real-world scenes and signals will help automakers implement better advanced driver-assistance systems (ADAS) and AVs safely and reliably.

EVOLUTION OF RADAR SENSORS

Powerful software algorithms are necessary to process the large amount of high-resolution sensor data, including inputs from vehicle-to-everything communications. Machine learning is the established method for training self-improving algorithms, which automotive manufacturers will apply to enhance decision-making in complex traffic situations. Validating these algorithms with realistic stimuli, in a repeatable and controlled manner in the lab, is crucial for accurate and safe deployment. After defining appropriate test scenarios, automotive designers and test engineers can render them from 3D simulations and convert them to radar signal inputs that are applied to the vehicle’s radar modules prior to road testing. Building an accurate 3D map of the world outside the vehicle and then converting it to the signals received and interpreted by the radar and electronic control unit are vital steps for developing autonomous driving.

Although automotive radar technology has been widely adopted, the complexity of the sensor front-end and signal processing is increasing dramatically as the following capabilities are added:

- Wider bandwidth: previously 1 GHz (76 to 77 GHz), now 4 GHz (77 to 81 GHz)

- Improved accuracy by using MIMO, i.e., multiple transmitting and receiving antennas

- Higher resolution with the fourth dimension of height or elevation

These newer generations of radar sensors that create wide antenna apertures using large MIMO antennas unlock a fourth sensing dimension: height or elevation. 2D and 3D radars do not perceive height because of the lack of MIMO and processing capabilities. 4D sensors offer better perception with increased resolution, using the full 4 GHz bandwidth. Behind the sensor, the detection algorithms must handle the increased complexity, leading to more stringent requirements for testing and validating. Training these sensors requires more than just point targets. In an urban area with high population density, many road intersections and turning scenarios, the radar must differentiate among cars, trucks, cyclists, e-scooters and three-wheeled cargo delivery bikes. The ability to identify and classify this range of objects is crucial to the reaction time of the vehicle and the safety of the passengers.
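
To put the bandwidth figures in perspective, the short sketch below applies the textbook range-resolution relation for a swept-bandwidth radar, ΔR = c / (2B). It is only an illustration of why the wider band matters: the function name is hypothetical, and a real sensor's achievable resolution also depends on its waveform and processing.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Theoretical range resolution c / (2B) for a sweep bandwidth B in Hz."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return C / (2.0 * bandwidth_hz)


# Compare the older 1 GHz band with the newer 4 GHz band named in the text.
for label, bandwidth_hz in (("76 to 77 GHz", 1e9), ("77 to 81 GHz", 4e9)):
    resolution_cm = range_resolution_m(bandwidth_hz) * 100.0
    print(f"{label}: about {resolution_cm:.1f} cm")
```

Under this idealized relation, quadrupling the bandwidth shrinks the smallest resolvable range difference by a factor of four, from roughly 15 cm to under 4 cm.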

REIMAGINING TESTING

The conventional way of testing the functions and algorithms of the radar is driving on roads for millions of miles. However, this will not cover all potential scenarios, particularly the one-in-a-million cases. The magnitude of the testing challenge underscores the need for simulation early in development in the lab and with approaches that overcome the shortfalls of current in-lab testing.

To validate the radar sensors, electronic control unit code and artificial intelligence, automotive manufacturers need to emulate real-world scenarios. Testing the physical hardware in a simulated environment close to real-world conditions helps to ensure AVs will behave as expected on the road. To test a radar sensor’s responses, the simulated environment and rendered conditions need to include weather and surrounding objects, as well as the vehicle dynamics and real-time responses to the dynamic road environment.

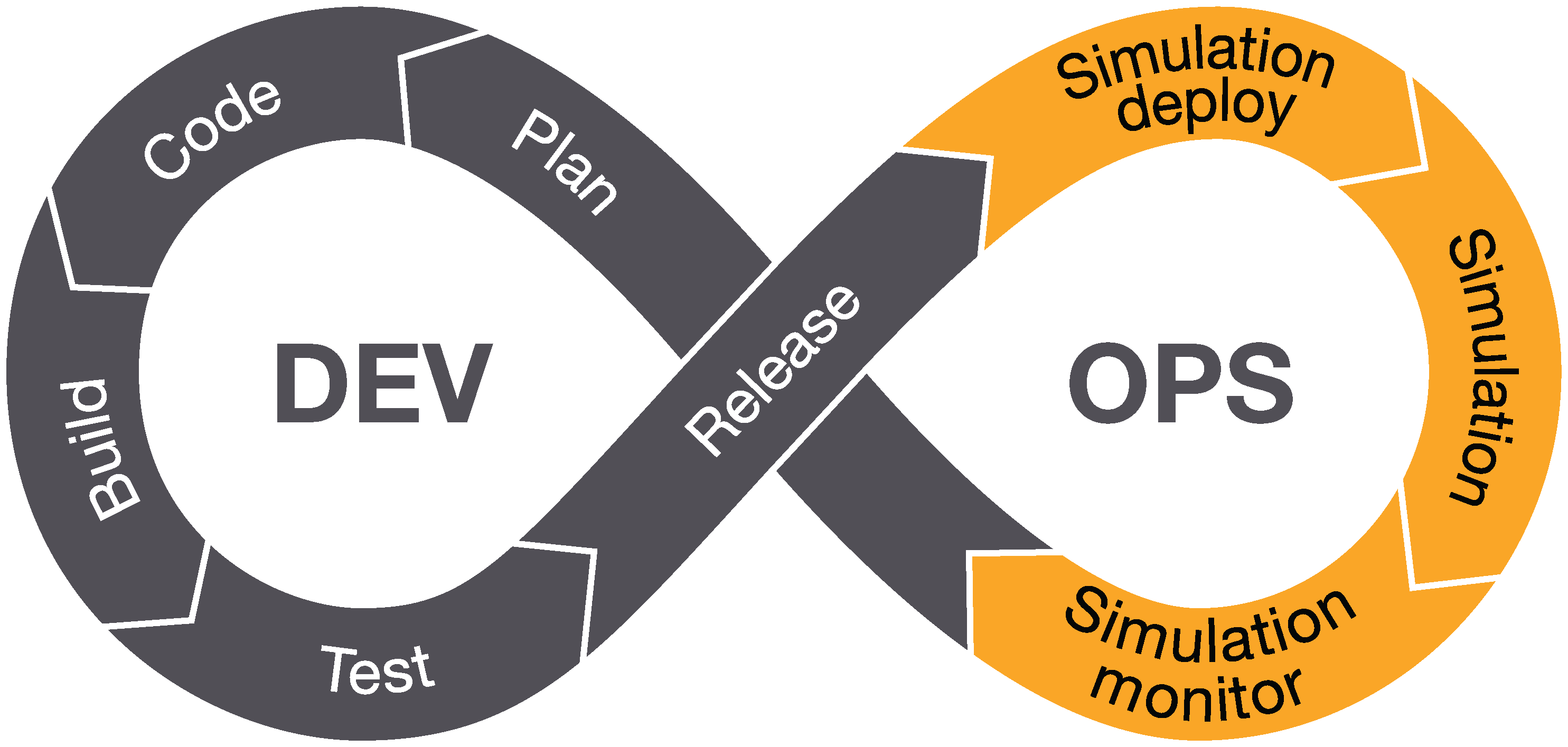

Software engineers use the development and operations (DevOps) model for software application development. This is a continuous cycle from development and test to deployment and back to development, which collects feedback to improve the product with every iteration. The DevOps model is being applied to automotive development as vehicles become more software based. In this context, the model comprises three phases: simulation, emulation and deployment.

Software Simulation

During simulation, the simulator creates a software environment that mimics the configuration and behavior of an actual device (see Figure 1). Automotive companies spend significant time on sensors and control modules, simulating their environments with software-in-the-loop testing. Automotive design and test engineers integrate, tweak and then loop the tests.

Figure 1. DevOps flow for software-in-the-loop testing during the simulation phase.

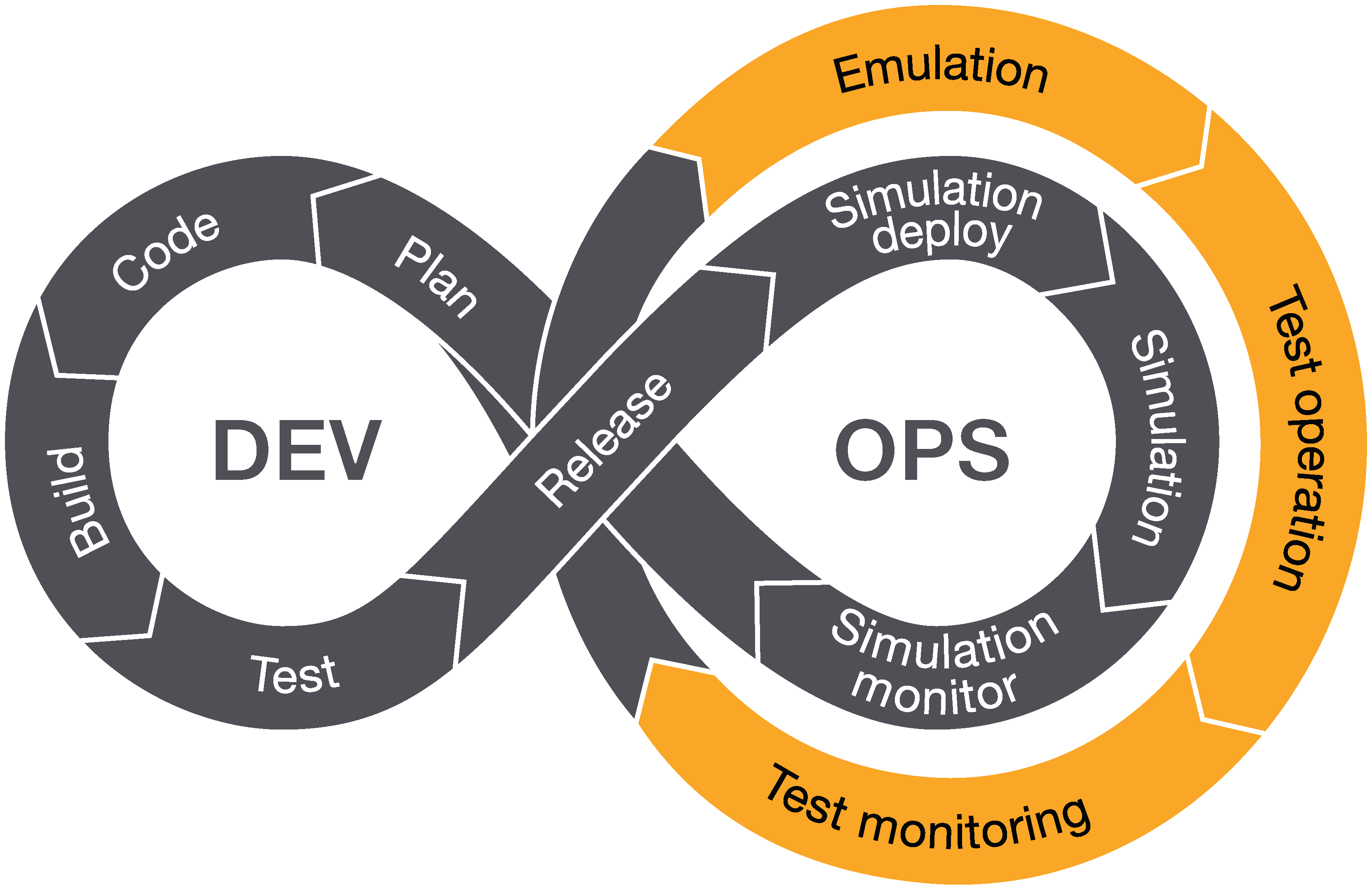

Emulation

After software simulation, the next step is adding hardware components to the test. The idea is to mimic a real device’s hardware and software features, enabling automotive developers to re-create an almost real-life environment for testing. This stage, called hardware-in-the-loop, is where the device is tested against actual components and systems in the lab. It bridges the gap between pure simulation and road testing, saving the time and cost of road testing. As sensor algorithms become more complex and convoluted, emulation is an increasingly important step in ADAS and AV development.

Figure 2. DevOps flow for hardware-in-the-loop testing during the emulation phase.

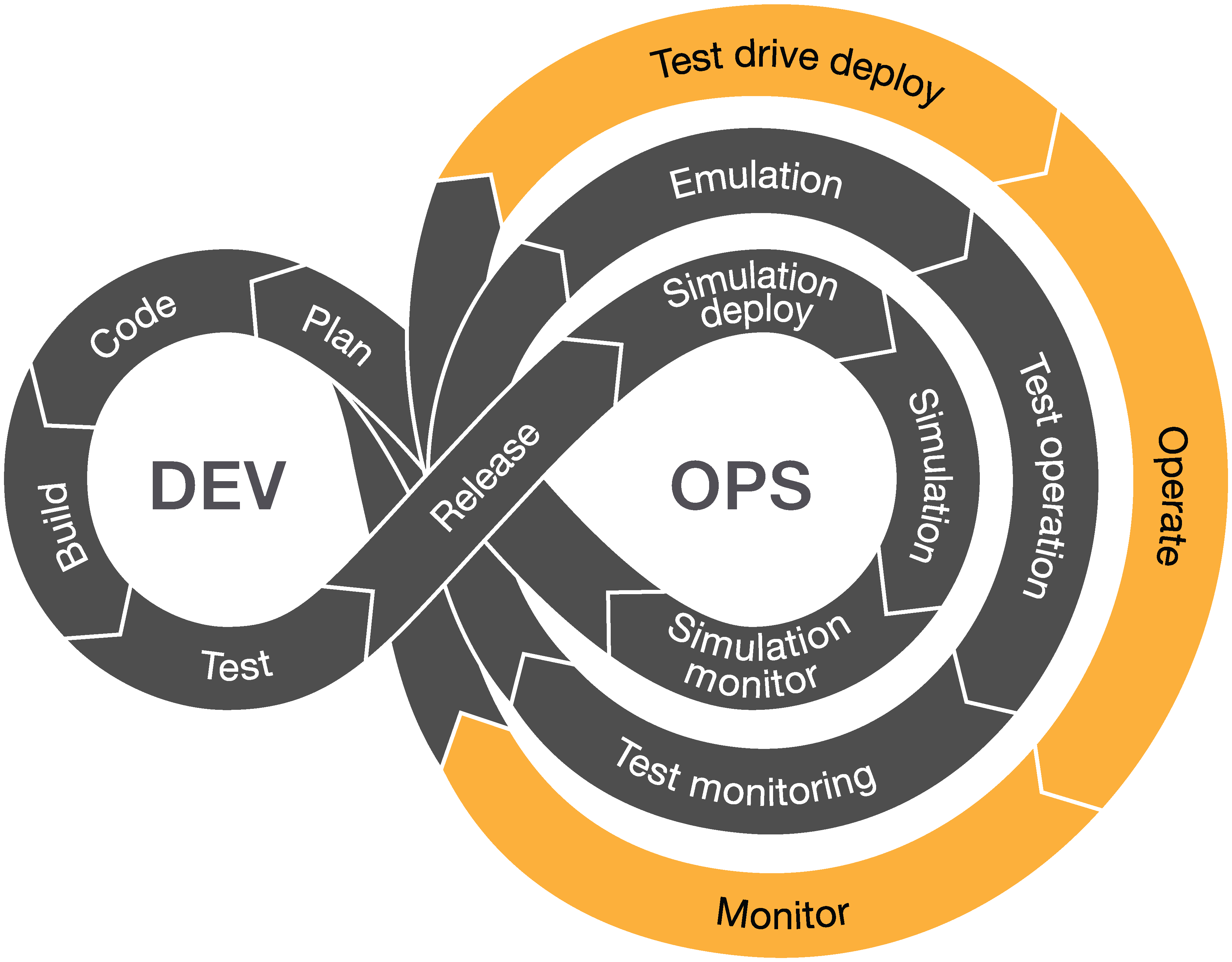

Testing On Open Roads

Open-road or test track testing in a prototype or “road legal” vehicle requires an integrated system on the vehicle. This step validates the final product before releasing it to the market. Road tests are risky and expensive: a failure can require a system redesign, cause financial losses for the company and prolong development time.

The test and development results from simulation feed the emulation phase, and those feed road testing. Each builds on the previous phase. The automotive industry employs this test process with many components, such as the brakes or the steering subsystem. However, implementing this same process with the radar sensor has not been straightforward. Most testing systems lack a way to emulate near real-life scenarios with sufficient detail to enable the radar algorithms to learn and iterate, which is necessary to prepare for open-road driving. While simple scenarios can be tested, the complex corner cases have been impossible to test, either in a structured lab environment or on the open road.

Figure 3. DevOps flow for road testing a vehicle with integrated ADAS systems.

ASSURING SAFE AUTONOMOUS DRIVING

At SAE level 3 and above, the autonomous driving functions are responsible for more than driver, passenger and pedestrian safety. They oversee every maneuver the vehicle makes on the road, which brings significant legal implications. Understandably, automotive manufacturers are particularly cautious when deploying ADAS capabilities, especially fully automated ones.

The move toward higher levels of vehicle autonomy adds more complexity to crash tests. In the past, testing a seat belt pretensioner, a side head airbag or a child restraint system would suffice. With AV systems, the test must cover the vehicle’s ability to autonomously brake when detecting an object on the road at a certain distance, whether the object is a vulnerable road user, such as a pedestrian or cyclist, or another vehicle cutting in at a lower speed.

Despite the risks, automotive manufacturers are under pressure to shorten the design cycle and get to market faster, which requires earlier testing of complex driving scenarios. Enhancing the capabilities of emulation can help address the comprehensive testing and time to market challenges.

FULL SCENE EMULATION IN THE LAB

Full scene emulation provides the capability to verify a radar system’s integration and performance on the vehicle while in a lab environment and prior to test track or open-road testing. This significantly reduces the risk of late test failures, identifying issues earlier in the development process.

To emulate scenarios in a lab, the output of simulation software is translated into real signals that stimulate the radar modules on the vehicle. This is accomplished as follows:

Point Clouds

Point clouds describe a data set of points that represent an object or set of objects. The points are coordinates in the x, y and z axes and are usually created using 3D simulation software, enabling a large amount of spatial information to be contained in one set. Point clouds add details to a scene, helping algorithms distinguish two objects close together. 3D laser scanners, lidar and radar produce and reference point clouds. While a traditional radar target simulator will return a single reflection independent of distance, emulation increases the number of reflections as the target gets closer. This type of dynamic resolution varies the number of points representing an object as a function of the distance from the object, which is a more accurate representation of the scene.
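
As a small illustration of how x, y, z points relate to what a radar reports, the sketch below converts each point to range, azimuth and elevation. The axis convention (x forward, y left, z up, sensor at the origin) and the function name are assumptions made for this example; simulation tools may use different conventions.

```python
import math


def to_range_azimuth_elevation(x: float, y: float, z: float) -> tuple[float, float, float]:
    """Convert one point in metres to (range m, azimuth deg, elevation deg).

    Assumed axes: x forward, y left, z up, with the sensor at the origin.
    """
    rng = math.sqrt(x * x + y * y + z * z)
    if rng == 0.0:
        raise ValueError("point coincides with the sensor")
    azimuth_deg = math.degrees(math.atan2(y, x))
    elevation_deg = math.degrees(math.asin(z / rng))
    return rng, azimuth_deg, elevation_deg


# A tiny, made-up point cloud: three points in front of the sensor.
point_cloud = [(10.0, 0.0, 0.0), (10.0, 10.0, 0.0), (3.0, 0.0, 4.0)]
for point in point_cloud:
    rng, az, el = to_range_azimuth_elevation(*point)
    print(f"{point}: range {rng:.2f} m, azimuth {az:.1f} deg, elevation {el:.1f} deg")
```

The elevation angle is the quantity that a sensor without height perception cannot recover from such a point.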

To accurately create a point cloud to test a radar system, the test system uses two hardware elements: ray tracing and a “wall of rixels.” Ray tracing (see Figure 4) extracts the information from a scene to be relayed to a sensor, such as a radar or camera. The concept of ray tracing was developed for computer graphics, i.e., the capability to render digital 3D objects on 2D screens by simulating the physical behavior of light. To illustrate, Figure 4 shows a light source illuminating an object, where the light rays are reflected and scattered in multiple directions. Only those that converge on the user are mapped on the viewing plane, the camera in the figure. The screen’s resolution is a function of the viewing plane’s characteristics and the object’s fine or coarse meshing. The object rendering includes its material properties and other information such as its color and brightness.

Figure 4. Ray tracing is used to extract information for radar and camera sensors.

Although this example refers to a visible image, the principle applies to any stimulus-response system based on line-of-sight radiation, including radar. With radar, the light source is the radar transmitter, the material properties include the objects’ reflectivity to the radar radiation and the spatial velocity translates into Doppler frequency shifts. To translate the information extracted from ray tracing into a signal a radar sensor can detect, the emulation creates each vehicle as an object and assigns directions and speeds to each in the simulation.
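
The link between an object's velocity and the Doppler shift can be sketched with the standard monostatic radar relation f_d = 2 v f_c / c, where v is the closing speed along the line of sight. This is a minimal illustration, not the emulator's method: the function names, the 77 GHz default carrier and the example vehicle are assumptions.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def closing_speed_mps(position_m, velocity_mps) -> float:
    """Speed along the line of sight; positive when the target approaches.

    The sensor is assumed to be stationary at the origin.
    """
    rng = math.sqrt(sum(p * p for p in position_m))
    if rng == 0.0:
        raise ValueError("target coincides with the sensor")
    # Project the velocity onto the sensor-to-target direction, then flip
    # the sign so that motion toward the sensor counts as positive.
    return -sum(p * v for p, v in zip(position_m, velocity_mps)) / rng


def doppler_shift_hz(speed_mps: float, carrier_hz: float = 77e9) -> float:
    """Two-way Doppler shift for a given closing speed."""
    return 2.0 * speed_mps * carrier_hz / C


# Hypothetical vehicle 50 m ahead and offset to the side, moving at 30 m/s
# against the x axis, so only part of its speed lies along the line of sight.
speed = closing_speed_mps((30.0, 40.0, 0.0), (-30.0, 0.0, 0.0))
print(f"closing speed {speed:.1f} m/s, Doppler {doppler_shift_hz(speed) / 1e3:.2f} kHz")
```

Only the radial component of the motion shifts the frequency, which is why the direction assigned to each object matters as much as its speed.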

The wall of rixels is the second hardware element required to create point clouds for the radar sensor being tested. Rixels are RF transceivers or front-ends small enough to fit into a chip-sized unit, each one acting like a pixel on a TV screen. With eight of them on one board and multiple boards stacked next to each other, this matrix of rixels creates a high-resolution wall, analogous to a high-definition screen with pixels that display different colors and brightness. Similarly, rixels “display” distance, velocity and object size.

Keysight’s Radar Scene Emulator contains a wall of rixels that provide radar echoes modulated to reflect the objects in a driving scenario. The Radar Scene Emulator comprises a 64 x 8 array of rixels that creates a dynamic radar environment. All electronic, the system is stable, repeatable and reliable, able to process more scenarios in less time than systems using mechanically moving antennas.

These miniature rixels are invisible, i.e., non-reflecting, to the radar sensor being tested. They are activated by the 3D simulation software that controls the individual rixel signals to model a scene in front of the test vehicle. Each rixel in the array emulates an object’s distance and echo strength. As objects get closer in the emulation, multiple transmissions from the rixels mirror the actual environment a radar will sense. This capability enables testing the radar’s hardware and algorithms for detecting and differentiating objects, which is critical to assessing performance and safety in congested urban areas.
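
To make the idea of more echoes for closer objects concrete, the sketch below marks which cells of a 64 x 8 grid a target would cover at different distances. It is a toy geometric model, not a description of how the emulator selects rixels: the angular coverage values, the function name and the target size are all hypothetical.

```python
import math

COLS, ROWS = 64, 8   # array size stated in the text
AZ_FOV_DEG = 64.0    # hypothetical azimuth coverage of the wall
EL_FOV_DEG = 8.0     # hypothetical elevation coverage of the wall


def active_rixels(distance_m: float, width_m: float, height_m: float) -> set:
    """Return the (row, col) cells covered by a flat target centred on boresight."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    half_az = math.degrees(math.atan2(width_m / 2.0, distance_m))
    half_el = math.degrees(math.atan2(height_m / 2.0, distance_m))
    az_step = AZ_FOV_DEG / COLS
    el_step = EL_FOV_DEG / ROWS
    cells = set()
    for row in range(ROWS):
        el_centre = (row + 0.5) * el_step - EL_FOV_DEG / 2.0
        for col in range(COLS):
            az_centre = (col + 0.5) * az_step - AZ_FOV_DEG / 2.0
            if abs(az_centre) <= half_az and abs(el_centre) <= half_el:
                cells.add((row, col))
    if not cells:
        # A target smaller than one cell still produces a single echo.
        cells.add((ROWS // 2, COLS // 2))
    return cells


# Hypothetical car-sized target (1.8 m wide, 1.5 m high) at three distances.
for distance in (100.0, 20.0, 5.0):
    print(f"{distance:5.1f} m: {len(active_rixels(distance, 1.8, 1.5))} active cells")
```

In this model the distant target collapses to one cell, much like a single point target, while the same target nearby spreads across many cells.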

Figure 5. An emulation distance of 1.5 m for scenarios with objects close to the vehicle.

Verifying the robustness of automotive radar hardware and AV algorithms depends on comprehensive testing. Many test cases — automatic emergency braking, front collision warning, lane departure warning and lane-keeping assistance — require emulating objects very close to the vehicle. Vehicles are typically less than 2 meters apart in every direction at a stoplight. When a vehicle is moving, a two-wheeled bicycle, motorcycle or scooter can swerve into the lane, or a pedestrian may suddenly step into the roadway. To test these cases, the emulation must discriminate distances down to 1.5 meters (see Figure 5). While it is challenging to emulate 1.5 meters, Keysight’s Radar Scene Emulator was designed to do so.
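
One way to see why such short distances are demanding is to look at the free-space round-trip time of an echo, t = 2R / c. The sketch below is simple physics only and does not describe the emulator's internal design; the function name is hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def round_trip_delay_ns(distance_m: float) -> float:
    """Free-space round-trip time, in nanoseconds, for a target at distance_m."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / C * 1e9


# Compare the 1.5 m case from the text with longer, easier distances.
for distance in (1.5, 10.0, 100.0):
    print(f"{distance:6.1f} m -> {round_trip_delay_ns(distance):7.1f} ns")
```

An object at 1.5 meters corresponds to an echo returning after about 10 ns, compared with hundreds of nanoseconds for objects at highway distances.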

SUMMARY

The goal of the automotive industry is zero accidents, zero emissions and zero congestion. Solving the complex and multifaceted problems to achieve these goals demands innovative technology supported by comprehensive and efficient testing to assess AV performance — particularly one-in-a-million corner cases. Efficiency requires accelerating the speed of testing, which means emulating complex driving scenarios in the lab and eliminating millions of miles of drive tests. Enabling lab testing capable of emulating complex, repeatable, high-density scenes will accelerate the cycles of learning in ADAS and AV sensors.

References

1. SAE blog, “SAE Levels of Driving Automation™ Refined for Clarity and International Audience,” May 3, 2021, web.

2. Michele Bertoncello and Dominik Wee, “Ten ways autonomous driving could redefine the automotive world,” McKinsey & Company, June 1, 2015, web.

3. BBC News, “Uber's self-driving operator charged over fatal crash,” September 16, 2020, web.