5G base station and infrastructure manufacturing is currently one of the hottest areas in communication technology as deployments are accelerating. Success among competitors is generally measured by traditional metrics such as cost of production and integration, productivity and time-to-market. Yet, delivering products in large quantities becomes more important as regulatory bodies start to pave the way to mass installations and delivery bottlenecks may cause significant loss of business. The question is, “How can we manufacture products faster, given the known technical and financial conditions?” There are probably many answers to this question, but in the context of testing of units in production, the answer is likely: “In a given period of time, increase the number of units properly produced and successfully tested, according to the specification.”

Improving the ‘speed of test’ has been an ongoing subject since the dawn of testing endeavors. The ever-increasing frequency of product releases, shorter time-to-market cycles and budgetary constraints call for new ideas on how to improve one of the fundamental metrics particularly important in production environments: test throughput.

Test throughput is essentially a reciprocal figure to the speed-of-test. Besides indicating how fast a single test can be executed, test throughput can additionally address cases where multiple tests are accomplished in parallel. Achieving higher throughput almost always demands some degree of concurrent operation, particularly in cases where speeding up a single test is either technically not possible or too expensive. Forms of parallelization may range from simple duplication to a configuration of independently executed tasks and subtasks that allow fully asynchronous test execution. The speed of test is either difficult or impossible to measure in such a highly parallelized testing environment. In contrast, test throughput remains a valid metric with an increasing significance.

Parallelization of tests must be well thought out; higher throughput rates must not lead to deterioration of quality-related metrics. The more tests that are being executed asynchronously, the more care needs to be put into ensuring that test results remain valid and consistent. Solutions designed for sequential execution are not necessarily suitable for parallel operation. Luckily, technological advancements in the domain of software architecture render such a shift in testing paradigms possible without sacrificing test quality and reproducibility. Solutions supporting parallelized operation are already commercially available.

PROBLEM DESCRIPTION

In the context of the emerging 5G infrastructure business, ensuring a high production throughput rate is crucial for success and competitiveness. Test throughput is only one of many factors that influence production throughput; yet, it is comparatively easy to improve in specific environments if a few prerequisites are met.

Traditionally, 5G production tests are executed close to or in conjunction with the product assembly line. Once the product is assembled, a manual or automated set of tests, including an RF test, is performed. After test execution and results retrieval, the system decides whether the device-under-test (DUT) has fulfilled the testing requirements. Lastly, the tested product is sorted accordingly. This is a typical example of sequential and synchronous test execution: each task waits until the previous task has finished and its result is available. Although RF tests generally do not have a large impact on the overall performance, the complexity of the test data analysis increases with tighter testing conditions and thus may render testing throughput worth improving.

While the sequential execution in its simplicity is acceptable for many applications, it also bears one potentially significant impediment: it generally does not scale well. Here, increasing throughput calls for either the installation of one or multiple duplicates of the production and testing equipment, which is expensive, or reduction of testing complexity. The latter may result in a poor overall yield or higher failure rates of devices in operation.

Coming back to the 5G infrastructure manufacturing and testing use case, the 3GPP TS 38.141 specification requires multiple metrics to be evaluated. Error Vector Magnitude (EVM), Operating Band Unwanted Emissions (OBUE) / Spectrum Emission Mask (SEM) and Adjacent Channel Leakage Power Ratio (ACLR) are the measurements commonly used to determine whether a DUT passes a test. All tests are performed jointly across several frequency and output power ranges; the depth and number of tests depend on the product category and its specifications.

Executing a typical production line test on a 5G macro-cell base station can take up to several minutes. Speeding up this process can significantly improve the throughput rate in production. Repetitive characterization tests may require multiple hours of testing scenarios. It is safe to say that by analyzing the complex process of production and testing of devices, one may find a few tasks or subtasks that are executed sub-optimally. Depending on the product and the testing specification the device needs to be tested against, the bottleneck could be either test data acquisition time or analysis time. Clearly, upcoming higher requirements in 5G FR2 testing (and beyond) will likely result in more complex analyses and thus longer processing times.

BACKGROUND

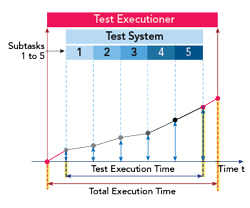

Figure 1 Sequential test scenario.

The concept of parallelization in the context of technology or productivity optimization is nothing new; in the last few decades, the development has been boosted by an increasing number of available parallel processing units (multicore CPUs) and the simplified access to those resources from both programming and operational standpoints. Asynchrony or asynchronous operation has gained more attention in recent years due to the rise of distributed systems. It forms a fundamental construct allowing non-deterministic operation mainly found in communication technology.

The rise of parallelization as a technology aspect paved the way to new problem-solving techniques: instead of focusing on improving individual execution performance, parallelization enables new levels of scalability. Looking at the cloud-based technologies available nowadays, it should be clear that performance gains are simpler to achieve within a framework that allows parallel processing.

Economic demands are rising as well; more than ever, it has become important to optimize the cost of usage of assets, such as test equipment, especially in cases where substantial investment is required; metrics such as Return-on-Investment (ROI) or Total Cost of Ownership (TCO) have gained importance. Lastly, new business and cost models based on Operational Expenditures (OPEX) ask for lower upfront spending and thus call for different commercial solutions. This, in turn, increases the importance of a qualified equipment usage metric which makes usage-based business models (such as pay-per-use) attractive for both the solution providers and their customers.

APPROACHES

Sequential Operation

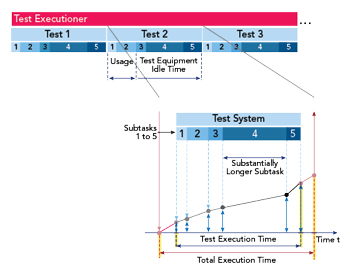

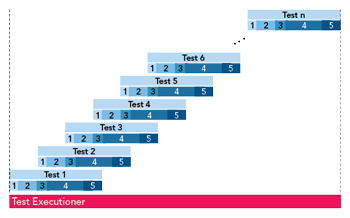

Figure 2 General overview of a test sequence.

Figure 3 Weak parallel test execution.

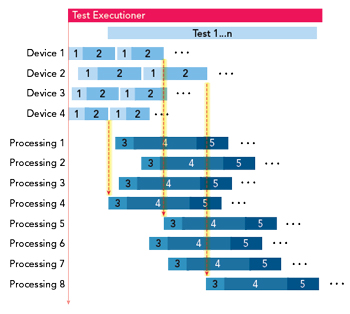

Figure 4 Strongly parallelized operation with decoupled subtasks. Arrows depict the arbitrary selection of processing units for the handling of incoming capture data.

Consider the following scenario: a DUT requires a specific set of tests to be performed upon it to ensure its functionality. Let us imagine each individual test sequence consists of five subtasks: test preparation and equipment setup, stimulus generation and data capture, test data retrieval (transmission), data processing/analysis, and test result delivery as shown in Figure 1. The test sequence is repeated either with a different set of testing parameters or through provisioning of another DUT.

In a sequential approach, subtasks are executed one after the other since the degree of dependency between two adjacent subtasks is high; it makes no sense to start data acquisition before test equipment has been instructed what exactly to capture. Equivalently, analysis of test data cannot happen before the data has been successfully transmitted.

Each subtask has its own execution time. In many cases it is a largely consistent number across test sequences, but it may exhibit some degree of uncertainty and jitter (e.g. in cases of non-deterministic data transmission). In sequential testing, the overall test execution time is the sum of the individual subtask execution times. Thus, shortening the overall test duration is only possible by accelerating one or more subtasks within that test sequence. Some ‘expensive’ subtasks may be subject to optimization, but in general there will always be technical (e.g. CPU clock speed) or procedural (e.g. handling or settling time) boundaries which do not allow further execution speedup.
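The relationship between subtask times, test duration and throughput can be illustrated with a short Python sketch. The subtask names and durations below are hypothetical placeholders, not measured values; real numbers depend on the DUT and the test plan.

```python
# Hypothetical subtask durations in seconds (illustrative only).
SUBTASK_SECONDS = {
    "prepare": 2.0,   # subtask 1: test preparation and equipment setup
    "capture": 3.0,   # subtask 2: stimulus generation and data capture
    "transfer": 1.0,  # subtask 3: test data retrieval
    "analyze": 8.0,   # subtask 4: data processing/analysis
    "report": 1.0,    # subtask 5: test result delivery
}


def sequential_duration(subtasks):
    """Duration of one test sequence: the sum of its subtask durations."""
    return sum(subtasks.values())


def sequential_throughput_per_hour(subtasks):
    """Test sequences completed per hour when they run strictly back to back."""
    return 3600.0 / sequential_duration(subtasks)


print(f"one sequence: {sequential_duration(SUBTASK_SECONDS):.1f} s")
print(f"throughput:   {sequential_throughput_per_hour(SUBTASK_SECONDS):.0f} sequences/h")
```

With these assumed numbers, halving the analysis time shortens the sequence from 15 s to 11 s, which shows how a single expensive subtask dominates sequential throughput.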

By looking at Figure 2, one can easily identify a further implication: test equipment used in subtasks 1 and 2 returns to idle mode when the respective subtasks have finished and becomes operational again only when the next test sequence is executed. The period between two subsequent test sequences determines the utilization ratio; the shorter the period, the higher the ratio. A high ratio is generally favorable; yet, improving this number in purely sequential operation is equally challenging.
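As a rough illustration, the utilization ratio can be expressed as the time the equipment is busy divided by the period between the starts of two subsequent test sequences. The function name and the timings in this sketch are assumptions made for the example.

```python
def equipment_utilization(busy_seconds, period_seconds):
    """Fraction of each test period during which the test equipment is in use."""
    if period_seconds <= 0:
        raise ValueError("period_seconds must be positive")
    return min(busy_seconds / period_seconds, 1.0)


# Hypothetical numbers: preparation and capture keep the equipment busy for 5 s.
busy = 2.0 + 3.0
# Sequential operation: the period equals the full 15 s test sequence.
print(f"{equipment_utilization(busy, 15.0):.0%}")
# Shorter period: the next capture starts as soon as the previous one ends.
print(f"{equipment_utilization(busy, 5.0):.0%}")
```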

Weakly Parallelized Operation

An alternative to a sequential implementation is to consider parallel execution of subtasks, or a set thereof, wherever possible. This may be an interesting approach in cases where individual subtasks require significant time for completion with a certain degree of decoupling rendered possible; any such ‘expensive’ subtask that bears a lower degree of dependency on adjacent tasks is potentially suited for migration to parallel operation.

Consider again the same test sequence of five subtasks needed to complete a test of a single DUT. In subtask 4, data processing and analysis, execution is likely to be computationally expensive, requiring a substantial amount of time to accomplish the task. This is generally found in 5G NR applications given the complex yet tight 3GPP specification requirements the production tests must fulfill. Clearly, parallelization of the execution of this and the following subtasks results in higher throughput.

In most cases, isolating a processing task is possible; subtasks of test preparation, stimulus generation and test data capture do not need to wait for the analysis to be finished and thus can be restarted. Depending on the parallelization capabilities of the processing unit, this process could be set up such that it allows complete decoupling of the expensive subtask, allowing other tasks to be performed much quicker.

This process can be named weak (or incomplete) parallelization since not all subtasks are eligible for parallel execution. As depicted in Figure 3, subtask 2 (capture) must wait for subtask 1 (preparation) to complete. However, once subtask 2 has completed, subtask 3 (data transmission) can be executed while subtask 1 of the next test iteration is started immediately. In contrast to sequential execution, the interval during which the testing equipment idles is also minimized or even eliminated, given its immediate reuse for the next test.
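A minimal Python sketch of this hand-off pattern is given here, assuming a single test setup and a pool of worker threads. All function names, the fake capture data and the pass limit are hypothetical placeholders; no real instrument API is implied.

```python
from concurrent.futures import ThreadPoolExecutor


def prepare(dut_id):
    """Subtask 1 (placeholder): configure the test equipment for this DUT."""
    return {"dut_id": dut_id, "num_samples": 4}


def capture(setup):
    """Subtask 2 (placeholder): return fake capture data instead of real samples."""
    return [float(setup["dut_id"])] * setup["num_samples"]


def transfer_analyze_report(dut_id, samples):
    """Subtasks 3 to 5 (placeholder): move the data, analyze it, deliver a verdict."""
    metric = sum(samples) / len(samples)
    return dut_id, metric < 3.0  # hypothetical pass limit


def run_weakly_parallel(dut_ids, workers=4):
    futures = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for dut_id in dut_ids:
            # Preparation and capture stay sequential on the single test setup.
            samples = capture(prepare(dut_id))
            # Hand off the expensive part; the loop moves on to the next DUT at once.
            futures.append(pool.submit(transfer_analyze_report, dut_id, samples))
    # Leaving the with-block waits for all outstanding analyses to finish.
    return dict(f.result() for f in futures)


print(run_weakly_parallel(range(5)))
```

Threads are used only to keep the sketch short. For CPU-bound analysis in CPython, a process pool or a separate analysis server would likely be needed to obtain a real speedup.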

Strongly Parallelized Operation

In the previous case, performance is limited by two factors: the number of parallel processing units available and the speed of execution of subtasks 1 and 2. While adding parallel processing capability is nowadays a relatively simple technical modification or upgrade, accelerating capture time is rather difficult, unless parallelization of the capturing process becomes viable. This is generally done by adding more capture devices to the setup. In that case, the test execution may be considered a strongly (or completely) parallelized operation; this applies when a high degree of decoupling between subtasks is given.
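The two limiting factors can be captured in an idealized steady-state model: throughput is bounded by the slower of the capture stage and the processing stage. The sketch below is a simplification that ignores jitter, queueing and transfer contention, and its timings are hypothetical.

```python
def steady_state_throughput(front_end_s, back_end_s, capture_devices=1, processing_units=1):
    """Idealized upper bound on tests per second for a two-stage test pipeline.

    front_end_s: preparation + capture time per test on one capture device
    back_end_s:  transfer + analysis + reporting time per test on one processing unit
    """
    capture_rate = capture_devices / front_end_s
    processing_rate = processing_units / back_end_s
    return min(capture_rate, processing_rate)


# Hypothetical timings: 5 s front end, 10 s back end.
for devices, units in [(1, 1), (1, 2), (1, 8), (4, 8)]:
    rate = steady_state_throughput(5.0, 10.0, devices, units)
    print(f"{devices} capture device(s), {units} processing unit(s): {rate * 3600:.0f} tests/h")
```

In this model, going from two to eight processing units brings no gain while a single capture device remains the bottleneck; only additional capture devices raise the bound further.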

Consider the ideal case depicted in Figure 4. Four devices independently deliver capture data, while the analysis of the test data can be done by eight parallel processing units.

In such a case, any arbitrary processing unit that is ‘free’ at a given time can fetch and handle the available data coming from any of the devices. The next available unit does the same, resulting in an asynchronous operation. In addition, each device may have a specific capture duration, resulting in slower or later provisioning of the capture data. This has no significant impact on the overall performance since the processing units are rather agnostic towards ‘who delivers data when’; they simply process any capture data available independently of its source.
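This source-agnostic behavior maps naturally onto a shared work queue. The following Python sketch assumes four capture devices and eight processing units as in the ideal case above; the workload size, the fake data and the stand-in analysis are hypothetical.

```python
import queue
import threading

NUM_DEVICES = 4          # capture devices
NUM_UNITS = 8            # parallel processing units
CAPTURES_PER_DEVICE = 3  # hypothetical workload per device


def capture_device(device_id, captures):
    """Deliver capture data to the shared queue, tagged with its source."""
    for n in range(CAPTURES_PER_DEVICE):
        captures.put((device_id, n, [0.1 * device_id] * 4))  # placeholder data


def processing_unit(captures, results):
    """Fetch whatever capture is available next, regardless of which device sent it."""
    while True:
        item = captures.get()
        if item is None:  # sentinel: no more work
            break
        device_id, n, samples = item
        results.put((device_id, n, max(samples)))  # stand-in for the real analysis


def run_strongly_parallel():
    captures, results = queue.Queue(), queue.Queue()
    units = [threading.Thread(target=processing_unit, args=(captures, results))
             for _ in range(NUM_UNITS)]
    devices = [threading.Thread(target=capture_device, args=(d, captures))
               for d in range(NUM_DEVICES)]
    for t in units + devices:
        t.start()
    for t in devices:
        t.join()
    for _ in units:
        captures.put(None)  # one sentinel per processing unit
    for t in units:
        t.join()
    return sorted(results.get() for _ in range(NUM_DEVICES * CAPTURES_PER_DEVICE))


print(len(run_strongly_parallel()), "captures analyzed")
```

Because results arrive in arbitrary order, each one carries the device and capture index it belongs to, so that verdicts stay traceable to the correct DUT.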