Over the past decade, the importance of RF-based systems on a chip (SoCs) has grown significantly, following the exponential growth of wireless electronic devices in virtually all industry segments. Today, semiconductor companies manufacture billions of RF devices that are shipped inside consumer, industrial and military products. The performance of the RF functionality within these SoCs is now considered just as important to the success of an SoC in the marketplace as the performance of the processor cores and memories.

One of the benefits of following in the footsteps of semiconductor processors and memories is that the RF industry can leverage the accumulated knowledge of manufacturing best practices from earlier semiconductor products. One such area is adopting a better way to measure yield and production productivity: good units per hour. Good units per hour measures the number of quality semiconductor chips produced and shipped each hour. The more shipped, the better the margins.

Big Data Access and Analysis is Key

How can companies balance yield, quality and productivity to produce more good units per hour? Accessing and analyzing real-time “big data” on the manufacturing floor is the key.

Each business unit in a global semiconductor company is charged with meeting specific and unique goals. For manufacturing, yield is a key metric; for product planning, the number of good die shipped to paying customers is what matters; the finance department’s main goal is to minimize the cost of goods sold to maximize margins and company profitability. With each operational unit singularly focused on its individual goal, communication across units is often irregular and sometimes nonexistent. Business units are rarely housed in the same building – often they are on different continents – and meet only once a quarter to discuss business goals. The data from business units is typically siloed and not accessible to other business units.

With infrequent communication between departments and compartmentalized data, how can companies bridge this information gap to align goals and deliver more good units per hour? Analyzing manufacturing data that is tracked, validated and monitored by all the key parties in near real time is the secret to aligning goals for quality, yield and productivity. For example, analyzing test data can identify weaknesses in the test process and areas for improvement that will simultaneously increase product yield and equipment utilization. While automated test equipment delivers millions of data points each day, extracting actionable decisions from the data is difficult to accomplish without a big data solution.

Traditionally, a significant time lag – up to 60 days – separated when a chip was tested on the manufacturing floor and when the test data was available to the product or quality engineers responsible for the device. Manufacturing problems found in the data were acted upon long after the devices left the manufacturing floor. Chips that might have been recovered to increase yield were lost, and chips of poor or unknown quality were often shipped.

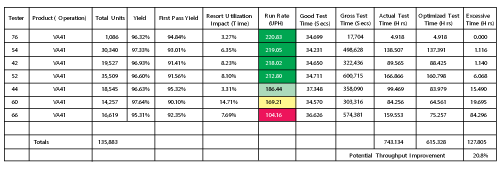

Figure 1 If all testers of product VA41 ran as efficiently as Tester 76, throughput could be increased by over 20 percent.

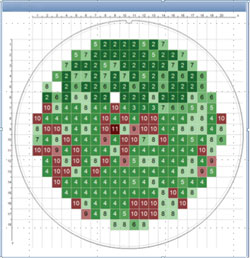

Figure 2 Companies can monitor the number of times good die has been probed and quarantine any die that has been probed more than "n" times.

Big data solutions enable the consolidation of test data from multiple facilities, whether internal or outsourced, into a single database. Yield and productivity data are easily compared, providing a check on quality. Seeing which facility is achieving the best performance motivates capacity improvement across the entire test fleet (see Figure 1). Adopting good die per hour as a metric aligns the entire semiconductor organization – engineering, operations and finance – and delivers higher quality to customers.
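The fleet comparison described above can be sketched in a few lines. This is a minimal illustration, not a vendor implementation: the `TesterRun` record, its field names and the sample numbers are all assumptions made for the example. It computes good units per hour for each tester and estimates the throughput gain if every tester matched the best one.

```python
from dataclasses import dataclass


@dataclass
class TesterRun:
    """Hypothetical summary of one tester's work on a product."""
    tester_id: str
    units_tested: int
    units_passed: int
    hours: float


def good_units_per_hour(run: TesterRun) -> float:
    """Passing units produced per hour of tester time."""
    return run.units_passed / run.hours


def fleet_uplift(runs: list[TesterRun]) -> float:
    """Fractional gain in good units if every tester matched the best one.

    Assumes tester hours stay fixed and only the rate changes.
    """
    best_rate = max(good_units_per_hour(r) for r in runs)
    total_hours = sum(r.hours for r in runs)
    current_good = sum(r.units_passed for r in runs)
    return best_rate * total_hours / current_good - 1.0


# Illustrative numbers only; not taken from any real test floor.
runs = [
    TesterRun("T1", units_tested=1000, units_passed=900, hours=10.0),
    TesterRun("T2", units_tested=1000, units_passed=950, hours=8.0),
    TesterRun("T3", units_tested=1000, units_passed=800, hours=10.0),
]

for run in runs:
    print(run.tester_id, round(good_units_per_hour(run), 2))
print("potential uplift:", round(fleet_uplift(runs), 3))
```

Because the metric combines yield (units passed) with productivity (hours), a tester that is fast but loses yield does not automatically rank first.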

Early Error Detection and Intervention

Before the advent of big data solutions in test, errors (whether equipment, human or operational) were largely unresolved by manufacturing operations. The time to find manufacturing issues and the large volume of data were prohibitive. Test engineers were traditionally forced to spend too many hours defining test limits and determining the correct test automation. Now, big data solutions automate and simplify manual processes through rule-based algorithms and analytic capabilities, making it possible to identify incorrect or broad test limits that are essentially meaningless. By establishing common test rules and algorithms within the data itself, operations teams can optimize the test limits for each test procedure in wafer sort or final test – dramatically increasing productivity.
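One possible rule for spotting overly broad limits is to compare the width of the limit window with the spread actually observed in the measurements. The sketch below is an assumption about how such a rule might look: the function name, the six-sigma comparison and the `max_ratio` threshold are illustrative choices, not something the article specifies.

```python
import statistics


def limits_too_broad(values, lower, upper, max_ratio=3.0):
    """Flag a test whose limit window dwarfs the observed spread.

    Returns True when (upper - lower) is more than max_ratio times the
    observed six-sigma spread, meaning the limits can rarely fail a part.
    max_ratio is a hypothetical tuning parameter.
    """
    sigma = statistics.stdev(values)
    if sigma == 0:
        # No spread observed, so the ratio is undefined; do not flag here.
        return False
    return (upper - lower) / (6 * sigma) > max_ratio


# Illustrative measurements clustered tightly around 1.0.
measurements = [1.00, 1.02, 0.98, 1.01, 0.99]

print(limits_too_broad(measurements, lower=0.0, upper=2.0))
print(limits_too_broad(measurements, lower=0.9, upper=1.1))
```

A flagged test is a candidate for engineering review rather than an automatic limit change, since wide limits are sometimes set deliberately.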

Equipment failure is a daily problem. Rare anomalies can go undetected by operations, resulting in unreliable test results. For example, a tester instrument can “freeze”, repeating the same measurement value for multiple parts, or it may skip some of the required tests. When 100 percent of the tests are not performed on 100 percent of the die, chips can be labeled “bad” at wafer sort and have no opportunity for retesting at final test. In other cases, perfectly good chips are discarded because of incomplete or faulty test results caused by human or environmental factors. The potential for test escapes, allowing bad chips to proceed after test, is an even bigger issue: a bad die may pass because of insufficient testing, risking a future product recall.
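Both anomalies mentioned above, a frozen instrument and skipped tests, lend themselves to simple rules. The following sketch assumes readings arrive as a list in part order and that test names are plain strings; the function names and the `min_run` threshold are hypothetical.

```python
def find_frozen_runs(readings, min_run=5):
    """Return (start_index, length) for each run of identical consecutive
    readings that is at least min_run parts long."""
    runs = []
    start = 0
    for i in range(1, len(readings) + 1):
        # Close the current run at the end of the list or on a value change.
        if i == len(readings) or readings[i] != readings[start]:
            if i - start >= min_run:
                runs.append((start, i - start))
            start = i
    return runs


def missing_tests(required, executed):
    """Return the required test names that never ran for a part."""
    return sorted(set(required) - set(executed))


# Illustrative data: the instrument repeats 0.99 for five parts in a row.
readings = [1.01, 1.03, 0.99, 0.99, 0.99, 0.99, 0.99, 1.02]
print(find_frozen_runs(readings))

required = ["continuity", "gain", "noise_figure"]
executed = ["continuity", "gain"]
print(missing_tests(required, executed))
```

Exact equality is used on purpose: real analog measurements rarely repeat to the last digit, so a long run of identical values points to the instrument rather than the parts.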

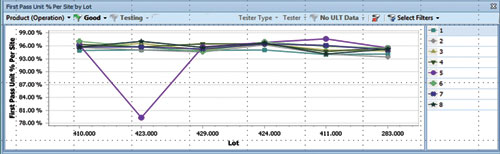

Figure 3 Yield loss due to probe card issues is a common occurrence. Identifying the problem early allows the issue to be resolved quickly, resulting in improved yield.

Operational problems, such as probing too many times and ruining die integrity, can also be detected in real time (see Figure 2). Other benefits of rules-based big data solutions include setting tester limits so that each die is tested within the appropriate parameters and temperatures. If limits are relaxed or die are tested only at optimal temperatures, bad die will pass through to assembly and final test, hurting product quality. Analyzing test data in real time can identify and quickly resolve a problem before it reduces yield, quality or productivity (see Figure 3).
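A probe-count rule of the kind shown in Figure 2 could be expressed as follows. This is a sketch under assumptions: each probe touchdown is logged as a `(wafer_id, x, y)` tuple, and `max_probes` stands in for the "n" in the figure caption.

```python
from collections import Counter


def dies_to_quarantine(probe_events, max_probes):
    """Return the die that were probed more than max_probes times.

    probe_events is an iterable of (wafer_id, x, y) tuples, one per touchdown.
    """
    counts = Counter(probe_events)
    return sorted(die for die, n in counts.items() if n > max_probes)


# Illustrative log: die (0, 0) on wafer W1 was probed four times.
events = [("W1", 0, 0)] * 4 + [("W1", 0, 1)] * 2
print(dies_to_quarantine(events, max_probes=3))
```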

What practices can companies use to maximize good die per hour? By analyzing high-quality, comprehensive and consistent data from all the manufacturing operations, engineers can establish rules based on proven best practices that will minimize test time and maximize results. Quick fixes include setting bin limits to realize yield momentum. To maximize the allocation of probe cards and testers, operations can set specific test rules and limits across the company’s global manufacturing supply chain.

Tracking Good Die per Hour and Preventing Escapes

Semiconductor companies use several methods and metrics to track good die. Unfortunately, the common measure of defective parts per million (DPPM) is taken after shipment. Companies have few opportunities to track die quality in test and assembly unless they can access real-time data from the testers. Test escapes are a key concern, especially for companies in the automotive industry where escapes can have serious consequences. No company wants to deliver a defective chip that is used in an anti-lock braking system (ABS).

A key benefit of leveraging big data in manufacturing is applying lean and Six Sigma principles to inline testing. By looking at outlier die and applying statistical methodologies, operations can determine if seemingly good die are imposters. If a good die is in a bad neighborhood, the likelihood of the die being viable is slim. This is where the chip’s individual DNA can be scrutinized through wafer sort, assembly and final test to determine its true quality.
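The "bad neighborhood" idea can be illustrated with a simple neighbor count on a wafer map. The sketch below is one assumed formulation, not a production screening algorithm: the wafer map is a dictionary from `(x, y)` to a pass/fail flag, the eight surrounding die count as neighbors, and `min_bad_fraction` is a hypothetical threshold.

```python
def suspect_good_die(wafer_map, min_bad_fraction=0.5):
    """Return passing die whose neighbors mostly failed.

    wafer_map maps (x, y) to True (pass) or False (fail). Positions missing
    from the map (for example, beyond the wafer edge) are ignored.
    """
    offsets = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1)
               if (dx, dy) != (0, 0)]
    suspects = []
    for (x, y), passed in wafer_map.items():
        if not passed:
            continue
        neighbors = [wafer_map[(x + dx, y + dy)] for dx, dy in offsets
                     if (x + dx, y + dy) in wafer_map]
        if not neighbors:
            continue
        bad_fraction = neighbors.count(False) / len(neighbors)
        if bad_fraction >= min_bad_fraction:
            suspects.append((x, y))
    return sorted(suspects)


# Illustrative 3x3 region: only the center die passed.
wafer_map = {(x, y): False for x in range(3) for y in range(3)}
wafer_map[(1, 1)] = True
print(suspect_good_die(wafer_map))
```

Die flagged this way would typically be routed for additional screening or review; where to set the threshold is a trade-off between escape risk and yield loss.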

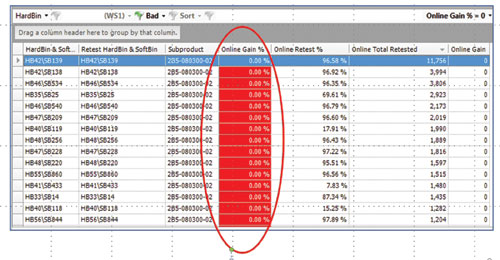

Figure 4 Test analytics clearly show that the current retest plan has NO IMPACT on yield recovery, resulting in higher test time and test costs with no benefit.

The ultimate benefit of deploying big data solutions in test comes from accessing and analyzing all available data from the operations floor. This is the key to marrying the goals of yield, quality and productivity and ultimately producing more good die per hour. When all units of a business are working together and using the same data, improvements at every level provide huge dividends. Whether it’s preventing escapes, reducing test time or determining the likelihood of bin recovery (see Figure 4), big data solutions provide real-time, data-driven information that helps business units work together and consistently and predictably deliver more good units per hour.

Over the past few years, many of the world’s largest semiconductor companies have deployed big data solutions in their manufacturing operations. This has enabled them to pinpoint test program issues, develop best-in-class test protocols and increase tester productivity. These initiatives have improved communication within the companies and increased yield, quality, productivity and profits.