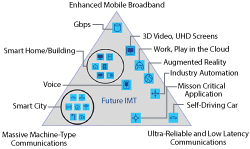

Figure 1 5G application scenarios defined by IMT-2020.

Any next-generation mobile communications technology has to provide better performance than the previous generation. With the transition from 3G to 4G, for example, theoretical peak data rates spiked from around 2 Mbps to 150 Mbps. Subsequently, LTE-Advanced Pro has reached Gbps peak data rates, with 1.2 Gbps data throughput recently demonstrated.[1] In a recent survey on 5G conducted by Qualcomm Technologies and Nokia,[2] 86 percent of the participants claimed that they need or would like faster connectivity on their next-generation smartphones. The conclusion that can be drawn from this is that data rates are always a driver for technology evolution.

But 5G is not only targeting higher data rates. The variety of applications that can be addressed with this next generation is typically categorized into what is commonly called the “triangle of applications,” shown in Figure 1. The hunt for higher data rates and more system capacity is summarized as enhanced mobile broadband (eMBB). Ultra-reliable low latency communications (URLLC) is the other main driver, with an initial focus on low latency. The requested lower latency impacts the entire system architecture—the core network and protocol stack, including the physical layer. Low latency is required to enable new services and vertical markets, such as augmented/virtual reality, autonomous driving and “Industry 4.0.” The triangle is completed by massive machine-type communication (mMTC); however, initial standardization efforts are focusing on eMBB and URLLC. All these applications have different requirements and prioritize their key performance indicators in different ways. This provides a challenge, as these different requirements and priorities have to be addressed simultaneously with a “one fits all” technology.

PRE-5G VS. 5G

It takes quite some time to define a “one fits all” technology within a standardization body, such as the 3rd Generation Partnership Project (3GPP). Several hundred companies and organizations are contributing ideas recommending how the challenges and requirements of 5G should be addressed. The proposals are discussed and evaluated and, finally, a decision is made on how to proceed. At the beginning of defining a new technology and standard that address the radio access network, air interface and core network, the process can be quite time consuming—time that some network operators do not have.

Often, one application is addressed and, in that case, a standard is developed that targets just one scenario. LTE in unlicensed spectrum (LTE-U) is one example in 4G. The goal was to easily use the lower and upper portion of the unlicensed 5 GHz ISM band to create a wider data pipe. 3GPP followed with its own, standard-embedded approach called licensed assisted access (LAA) about 15 months later. 5G is no different. Fixed wireless access (FWA) and offering “5G services” at a global sports event like the 2018 Winter Olympic Games in Pyeongchang, South Korea are two examples within the 5G discussion. For both, custom standards were developed by the requesting network operator and its industry partners. Both these standards are based on LTE, as standardized by 3GPP with its Release 12 technical specifications, enhanced to support higher frequencies, wider bandwidths and beamforming technology.

Take the example of FWA. The network operator behind this requirement is U.S. service provider Verizon Wireless. Today’s service providers do not just offer traditional landline communications and wireless services; they also supply high speed internet connections to the home and are expanding into providing content through these connections. Verizon’s initial approach to bridge the famous “last mile” connection to the home was fiber to the home (FTTH). In some markets, Verizon sold that business to other service providers, such as Frontier Communications.[3] To enhance its business model, Verizon is developing its own wireless technology for high speed internet connections to the home. To be competitive and stay future proof, Gbps connections are required that outperform what is possible today with LTE-Advanced Pro.

The achievable data rates over a wireless link depend on four factors: the modulation, achievable signal-to-noise ratio (SNR), available bandwidth and whether multiple-input-multiple-output (MIMO) antenna technology is used. From the early 90s to the millennium, the wireless industry optimized its standards to improve SNR and, thus, data rates. At the turn of the century and with the success of the Internet, this was no longer acceptable; bandwidth was increased up to 5 MHz with 3G. Initially with 4G, wider bandwidth—up to 20 MHz—was introduced, as well as 2 × 2 MIMO. Today, with higher-order modulation up to 256-QAM, 8 × 8 MIMO and bundling multiple carriers in different frequency bands using carrier aggregation (CA), peak data rates have reached 1.2 Gbps. To further increase data rates, for the use case of FWA, in particular, wider bandwidths are required. This bandwidth is not available in today’s sweet spot for wireless communications—between 450 MHz and 6 GHz. More bandwidth is only available at higher frequencies with centimeter and mmWave wavelengths. But there is no free lunch. Moving up in frequency has its own challenges.
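As a rough illustration of how bandwidth, SNR and the number of MIMO streams combine, the sketch below uses the Shannon capacity bound. This bound is not the calculation used in the article; it is a theoretical upper limit that ignores the modulation order, coding overhead and control signaling. The 20 dB SNR and the two spatial streams are assumed values chosen only for the example.

```python
import math


def shannon_rate_bps(bandwidth_hz, snr_db, streams=1):
    """Theoretical upper bound: streams * B * log2(1 + SNR)."""
    snr_linear = 10 ** (snr_db / 10)
    return streams * bandwidth_hz * math.log2(1 + snr_linear)


# Compare a 20 MHz carrier with a 100 MHz carrier at the same (assumed) SNR.
for bandwidth_hz in (20e6, 100e6):
    rate = shannon_rate_bps(bandwidth_hz, snr_db=20, streams=2)
    print(f"{bandwidth_hz / 1e6:.0f} MHz: up to {rate / 1e6:.0f} Mbps")
```

At a fixed SNR the bound scales linearly with bandwidth, which is why wider carriers are the most direct route to higher data rates.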

HIGH FREQUENCY CHALLENGES

An analysis of the free space propagation loss (FSPL) shows that path loss increases as frequency increases. Wavelength (λ) and frequency (f) are connected through the speed of light (c), i.e.,

$$\lambda f = c$$

so as frequency increases, wavelength decreases. This has two major effects. First, with decreasing wavelength the required spacing between two antenna elements (usually λ/2) decreases, which enables the design of practical antenna arrays with multiple antenna elements. The higher the order of the array, the more the transmitted energy can be focused in a specific direction, which allows the system to overcome the higher path loss experienced at cmWave and mmWave frequencies. The second effect relates to propagation. Below 6 GHz, diffraction is typically the dominating factor affecting propagation. At higher frequencies, the wavelengths are so short that they interact more with surfaces, and scattering and reflection have a much greater effect on coverage.
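To put numbers on both effects, the following sketch computes the half-wavelength element spacing and the free space path loss using the standard formula 20·log10(4πdf/c). The 2.3 GHz and 28 GHz carriers and the 1000 m distance are taken from elsewhere in this article; the function names are illustrative.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength_m(freq_hz):
    """Wavelength from lambda * f = c."""
    return C / freq_hz


def fspl_db(distance_m, freq_hz):
    """Free space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)


for freq_hz in (2.3e9, 28e9):
    spacing_mm = wavelength_m(freq_hz) / 2 * 1000
    loss_db = fspl_db(1000, freq_hz)
    print(f"{freq_hz / 1e9:g} GHz: lambda/2 = {spacing_mm:.1f} mm, "
          f"FSPL at 1000 m = {loss_db:.1f} dB")
```

At 28 GHz the element spacing shrinks to roughly 5 mm, while the free space loss over 1000 m rises to about 121 dB, roughly 22 dB more than at 2.3 GHz.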

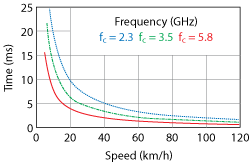

Figure 2 Coherence time vs. speed for three carrier frequencies below 6 GHz.

mmWave frequencies also challenge mobility. Mobility is dependent on the Doppler shift, $f_d$, defined by the equation:

$$f_d = \frac{f_c v}{c}$$

where $f_c$ is the carrier frequency and $v$ is the desired velocity that the system supports. The Doppler effect is directly related to the coherence time, $T_{coherence}$, which may be estimated with the approximation:

$$T_{coherence} \cong \frac{1}{2 f_d}$$

Coherence time defines the time the radio channel can be assumed to be constant, i.e., its performance does not change with time. This time impacts the equalization process in the receiver. As shown in Figure 2, the coherence time decreases with increasing speed. For example, to drive 100 km/h and maintain the link at a carrier frequency of 2.3 GHz, the coherence time is about 2 ms. That means the radio channel can be assumed to be constant for 2 ms. Applying the Nyquist theorem, with a time period of 2 ms, two reference symbols need to be embedded in the signal to properly reconstruct the channel. Figure 2 shows that coherence time decreases at higher frequencies. For cmWave frequencies, the Doppler shift is already 100 Hz at walking speed, and it increases with higher velocity. Thus, the coherence time decreases significantly, making the use of cmWave and mmWave frequencies in high-mobility scenarios inefficient. This is the major reason why 3GPP’s initial focus standardizing the 5G new radio (5G NR) is on the so called non-standalone (NSA) mode, using LTE as the anchor technology for the exchange of control and signaling information and for mobility. With FWA, mobility is not required, so Verizon’s technology approach can completely rely on mmWave frequencies, together with the exchange of control and signaling information between the network and connected device.
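The two relations above can be checked numerically with a short sketch. The 2.3 GHz and 100 km/h case comes from the text; the 5 km/h walking speed and the choice of 28 GHz as the higher carrier are assumptions made for the example.

```python
C = 299_792_458.0  # speed of light in m/s


def doppler_shift_hz(carrier_hz, speed_kmh):
    """Doppler shift f_d = f_c * v / c, with the speed given in km/h."""
    return carrier_hz * (speed_kmh / 3.6) / C


def coherence_time_s(carrier_hz, speed_kmh):
    """Approximate coherence time T = 1 / (2 * f_d)."""
    return 1.0 / (2.0 * doppler_shift_hz(carrier_hz, speed_kmh))


cases = [(2.3e9, 100), (28e9, 5), (28e9, 100)]
for carrier_hz, speed_kmh in cases:
    f_d = doppler_shift_hz(carrier_hz, speed_kmh)
    t_c_ms = coherence_time_s(carrier_hz, speed_kmh) * 1000
    print(f"{carrier_hz / 1e9:g} GHz at {speed_kmh} km/h: "
          f"f_d = {f_d:.0f} Hz, T_coherence = {t_c_ms:.2f} ms")
```

Under these assumptions the coherence time at 28 GHz and 100 km/h falls to roughly a tenth of the 2.3 GHz value, which illustrates why reference symbols would have to be embedded far more densely.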

28 GHz LINK BUDGET

As explained, the use of antenna arrays and beamforming enables the use of mmWave frequencies for wireless communication. Verizon targets the 28 GHz frequency band that was allocated by the FCC as 5G spectrum in 2016,[4] with a bandwidth up to 850 MHz. With its acquisition of XO Communications in 2015,[5] the operator gained access to 28 GHz licenses and is planning to use these for the initial roll-out of its own (Pre-)5G standard, summarized under the name 5G Technical Forum (5GTF).[6]

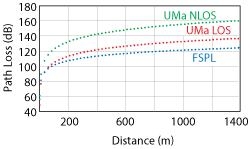

Figure 3 28 GHz path loss vs. cell separation, comparing FSPL with LOS and NLOS for an urban macro deployment, using the ABG channel model.

From an operator’s perspective, the viability of a new technology depends on fulfilling the business case given by the business model. The business case is governed by two main factors: the required capital expenditure (CAPEX) and the cost to operate and maintain the network, referred to as operating expenditure (OPEX). CAPEX is driven by the number of cell sites deployed, which depends on the required cell edge performance (i.e., the required data rate at the cell edge) and the achievable coverage. cmWave and mmWave frequencies allow beamforming that helps overcome the higher path loss, but coverage is still limited compared to frequencies below 6 GHz, the primary spectrum being utilized for wireless communications.

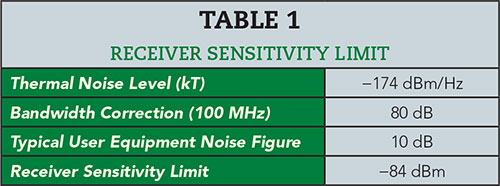

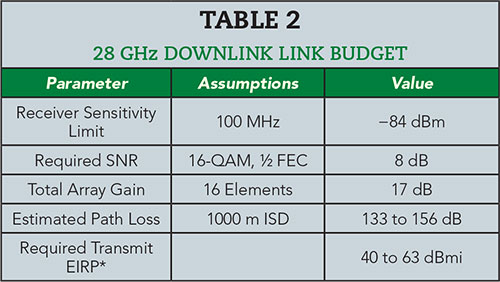

To ensure adequate coverage, a link budget analysis is essential. Considering the 28 GHz band with 100 MHz carrier bandwidth, first the receiver sensitivity limit is calculated. The thermal noise level is -174 dBm/Hz and needs to be adjusted to the supported bandwidth of 100 MHz per component carrier, as defined in the 5GTF standard, which adds 80 dB. In this calculation, the typical noise figure used for the receiver is 10 dB, which results in an overall receiver sensitivity limit of -84 dBm/100 MHz (see Table 1).

Next, the expected path loss is determined. Free space path loss is based on a line-of-sight (LOS) connection under ideal conditions. In reality, this is not the case, so extensive channel sounding measurement campaigns have been executed by various companies with the help of educational bodies, resulting in channel models describing the propagation in different environments and predicting the expected path loss. These are typically for LOS and non-LOS (NLOS) types of connections. With FWA, NLOS connections are normally used. Early on, Verizon and its industry partners used their own channel models, despite 3GPP working on a channel model for standardizing 5G NR. There are, of course, differences between these models. For the link budget analysis considered here, one of the earliest available models is used.[7]
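The receiver sensitivity limit quoted in this section follows from three terms: the thermal noise density, the bandwidth correction and the noise figure. A minimal sketch of that arithmetic, using the values given in the text (the function name is illustrative):

```python
import math

THERMAL_NOISE_DBM_PER_HZ = -174.0  # thermal noise density used in the text


def receiver_sensitivity_dbm(bandwidth_hz, noise_figure_db):
    """Noise floor in dBm: thermal density + 10*log10(bandwidth) + noise figure."""
    bandwidth_term_db = 10 * math.log10(bandwidth_hz)
    return THERMAL_NOISE_DBM_PER_HZ + bandwidth_term_db + noise_figure_db


# 100 MHz component carrier and a 10 dB receiver noise figure, as in the text.
limit_dbm = receiver_sensitivity_dbm(100e6, 10)
print(f"Receiver sensitivity limit: {limit_dbm:.1f} dBm per 100 MHz")
```

Every doubling of the carrier bandwidth raises this limit by about 3 dB, so wider carriers buy data rate at the cost of link budget.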

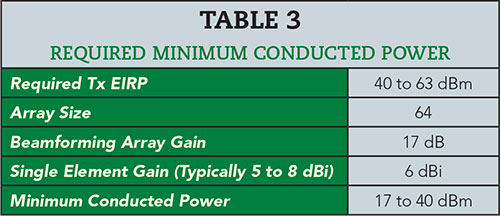

Assuming an urban macro (UMa) deployment scenario, Figure 3 displays the expected path loss at 28 GHz for LOS and NLOS connections compared to FSPL. From an operator’s perspective, a large inter-cell site distance (ISD) is desired, since the higher the ISD, the fewer cell sites are required and the lower the CAPEX. However, the link budget determines the achievable ISD. Various publications show that an ISD of 1000 m is a deployment goal. Such an ISD results in a path loss of at least 133 dB for LOS and 156 dB for NLOS links using the alpha beta gamma (ABG) channel model. The next step is to decide on the required cell edge performance, i.e., the required data rate. The data rate per carrier depends on the modulation, MIMO scheme and achievable SNR. A typical requirement is, for example, to achieve a spectral efficiency of 2 bps/Hz, i.e., 200 Mbps for a 100 MHz wide channel. To achieve this, an SNR of around 8 dB is required, which increases the receiver sensitivity limit further. However, as the receiver is using an antenna array, beamforming gain is available, determined by the gain of a single antenna element and the total number of elements. A good approximation in this early stage of 5G development is 17 dBi for the total receive beamforming gain. Based on the estimated path loss, the required total equivalent isotropic radiated power (EIRP) and the required conducted transmit power can be determined. Given the above calculations, the required total EIRP for the transmit side is between 40 and 63 dBm (see Table 2). It is a fair assumption that using a larger antenna array at the 5G remote radio head results in larger beamforming gain. Table 3 provides an ideal calculation of what conducted power is required to provide the required EIRP (17 to 40 dBm). For mmWave components, these are high output powers, and it is a challenge to the industry to design power amplifiers and the required circuitry to drive the RF front-end and antenna arrays. 
As not all substrates can provide such high output power, the companies designing these RF components are divided over which technology approach to pursue. One of the challenges is to provide components with acceptable power-added efficiency to limit heat dissipation.
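The downlink numbers in this section can be reproduced with a few lines of arithmetic. The sketch below takes the sensitivity limit, SNR, receive beamforming gain and path loss values from the text. The conversion from EIRP to conducted power assumes an ideal array whose gain is the element gain plus 10·log10(N). The 5 dBi element gain and the array sizes are hypothetical values chosen for illustration, not entries from Table 3.

```python
import math


def required_eirp_dbm(sensitivity_dbm, snr_db, rx_gain_dbi, path_loss_db):
    """EIRP needed so the received signal meets sensitivity plus required SNR."""
    return sensitivity_dbm + snr_db - rx_gain_dbi + path_loss_db


def ideal_array_gain_dbi(n_elements, element_gain_dbi):
    """Idealized array gain: element gain plus 10*log10(number of elements)."""
    return element_gain_dbi + 10 * math.log10(n_elements)


def conducted_power_dbm(eirp_dbm, n_elements, element_gain_dbi):
    """Total conducted power needed for a given EIRP with an ideal array."""
    return eirp_dbm - ideal_array_gain_dbi(n_elements, element_gain_dbi)


# Path loss at an ISD of 1000 m, as quoted in the text.
for label, path_loss_db in (("LOS", 133), ("NLOS", 156)):
    eirp = required_eirp_dbm(-84, 8, 17, path_loss_db)
    print(f"{label}: required EIRP = {eirp} dBm")
    for n_elements in (64, 256):  # hypothetical array sizes
        power = conducted_power_dbm(eirp, n_elements, element_gain_dbi=5)
        print(f"  {n_elements} elements: conducted power = {power:.1f} dBm")
```

The EIRP results match the 40 to 63 dBm range stated above. The conducted power figures depend entirely on the assumed array and should be read as an illustration of the trade-off between array size and amplifier output power.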

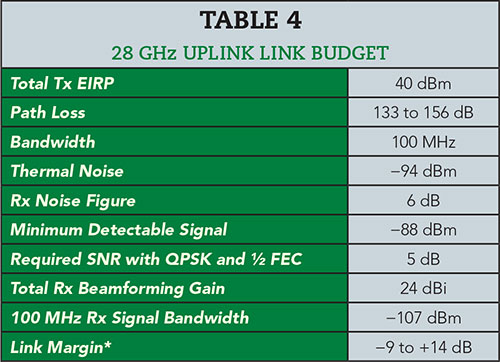

Based on this analysis, establishing a viable communication link in the downlink direction with an ISD of 1000 m is possible. However, previous generations of wireless technologies were uplink power limited, and 5G is no exception. Table 4 shows the uplink link budget assuming a maximum conducted device power of +23 dBm and the form factor of a customer premises equipment (CPE) router with a 16-element antenna array. Depending on the path loss and the assumed channel model, the calculated link margin spans a wide range (i.e., -9 to +14 dB). Any margin below zero indicates that the link cannot be closed. Based on these rather ideal calculations, it can be concluded that an uplink at mmWave frequencies with an ISD of 1000 m is problematic.
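A link margin of this kind is the received power minus the power the receiver needs. The sketch below shows the structure of the calculation only. The +23 dBm device power, the path loss values and the -84 dBm sensitivity limit come from the text. The 17 dBi CPE array gain, the 24 dBi base station receive gain and the 1 dB uplink SNR requirement are hypothetical inputs, picked so that the result lands in the range quoted above; the individual entries of Table 4 may differ.

```python
def link_margin_db(tx_power_dbm, tx_gain_dbi, rx_gain_dbi,
                   path_loss_db, sensitivity_dbm, required_snr_db):
    """Margin = received power - (sensitivity limit + required SNR)."""
    received_dbm = tx_power_dbm + tx_gain_dbi + rx_gain_dbi - path_loss_db
    required_dbm = sensitivity_dbm + required_snr_db
    return received_dbm - required_dbm


for label, path_loss_db in (("LOS", 133), ("NLOS", 156)):
    margin = link_margin_db(
        tx_power_dbm=23,      # maximum conducted device power (from the text)
        tx_gain_dbi=17,       # hypothetical gain of the 16-element CPE array
        rx_gain_dbi=24,       # hypothetical base station receive gain
        path_loss_db=path_loss_db,
        sensitivity_dbm=-84,  # sensitivity limit per 100 MHz (from the text)
        required_snr_db=1,    # hypothetical uplink SNR requirement
    )
    status = "link closes" if margin >= 0 else "link cannot be closed"
    print(f"{label}: margin = {margin} dB ({status})")
```

Because the device power is fixed, the only levers in the uplink are the antenna gains and the path loss, which is why a shorter ISD becomes the practical remedy.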

For that reason, 3GPP defines a 5G NR user equipment (UE) power class that allows a total EIRP of up to +55 dBm.[8] Current regulations in the U.S. allow a device with such a high EIRP but not in a mobile phone form factor.[9] However, achieving this EIRP is a technical challenge by itself, and such devices may come to the market at a much later stage. From that perspective, a service provider should consider a shorter ISD in its business case. Current literature and presentations at various conferences indicate that cell sizes of 250 m or less are being planned for the first generation of radio equipment. Now it needs to be determined if a shorter ISD, such as 250 m, fulfills the business case for 5G mmWave FWA.

5GTF INSIGHTS

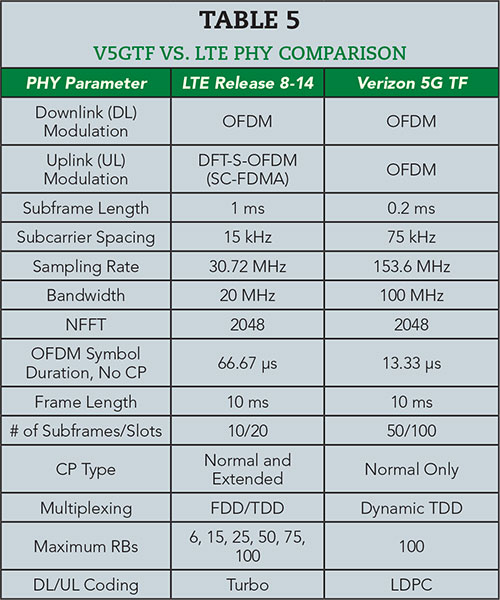

The Verizon 5G standard uses the existing framework provided by 3GPP’s LTE standard. Moving up in carrier frequency and factoring the increasing phase noise at higher frequencies, wider subcarrier spacing is required to overcome the inter-carrier interference (ICI) that will be created. The Verizon standard uses 75 kHz instead of 15 kHz. A comparison of all major physical layer parameters is given in Table 5.
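One direct consequence of the wider subcarrier spacing is a shorter OFDM symbol, because the useful symbol duration (excluding the cyclic prefix) is the reciprocal of the subcarrier spacing. A minimal sketch of that relation, using the two spacings named above:

```python
def useful_symbol_duration_us(subcarrier_spacing_hz):
    """Useful OFDM symbol duration in microseconds, excluding the cyclic prefix."""
    return 1e6 / subcarrier_spacing_hz


for name, spacing_hz in (("LTE", 15e3), ("5GTF", 75e3)):
    duration_us = useful_symbol_duration_us(spacing_hz)
    print(f"{name}: {spacing_hz / 1e3:.0f} kHz spacing -> {duration_us:.2f} us per symbol")
```

A fivefold increase in spacing shortens each symbol by the same factor, from about 66.7 µs to about 13.3 µs.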

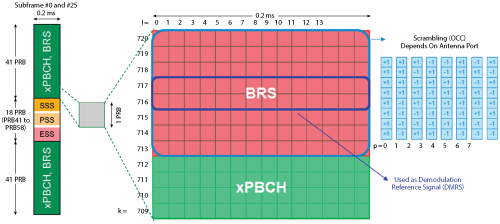

Figure 4 5GTF synchronization and beamforming reference signals.

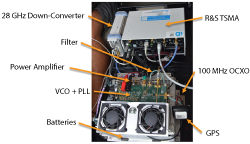

Figure 5 R&S 5GTF coverage measurement system.

In determining 5G network coverage, several physical signals should be understood. In Verizon’s 5G standard, the primary and secondary synchronization signals (PSS and SSS) are transmitted using frequency-division multiplexing (FDM), versus the time-division multiplexing (TDM) approach of LTE. Also, a new synchronization signal is introduced, the extended synchronization signal (ESS), which helps to identify the orthogonal frequency-division multiplexing (OFDM) symbol timing. Figure 4 shows the mapping of the synchronization signals (SSS, PSS, ESS) contained in special subframes 0 and 25; they are surrounded by the beamforming reference signal (BRS) and extended physical broadcast channel (xPBCH).

A device uses the synchronization signals during the initial access procedure to determine which 5G base station to connect to and then uses the BRS to estimate which of the available beamformed signals to receive. The standard allows for a certain number of beams to be transmitted, the exact number depending on the BRS transmission period. This information is provided to the device via the xPBCH. In its basic form, one beam is transmitted per OFDM symbol; however, the use of an orthogonal cover code (OCC) allows for up to eight beams per OFDM symbol. Depending on the selected BRS transmission period (there are four options: one slot, or one, two or four subframes), multiple beams can be transmitted, on which the CPE performs signal quality measurements. Based on these BRS received signal power (BRSRP) measurements, the CPE will maintain a set of the eight strongest beams and report the four strongest ones back to the network.

In general, the same principles apply as for determining coverage for existing 4G LTE technology. A receiver (network scanner) first scans the desired spectrum, in this case 28 GHz, for synchronization signals to determine the initial timing and physical cell ID (PCI) that is provided by PSS and SSS. The ESS helps to identify the OFDM symbol timing. The next step is to perform quality measurements on the BRS, the same as a CPE would do, to determine which beam offers the best reception and to maintain and display the set of the eight strongest received beams.
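The beam bookkeeping described here, keeping the eight strongest beams and reporting the four strongest, amounts to a top-k selection over the BRSRP measurements. The sketch below illustrates only that selection logic. The function name, the dictionary layout and the BRSRP values are hypothetical and are not taken from the 5GTF specification.

```python
import heapq


def track_beams(brsrp_dbm, tracked=8, reported=4):
    """Return (tracked, reported) beam indices, strongest BRSRP first.

    brsrp_dbm maps a beam index to its measured BRSRP in dBm.
    """
    strongest = heapq.nlargest(tracked, brsrp_dbm.items(), key=lambda item: item[1])
    tracked_beams = [beam_index for beam_index, _ in strongest]
    return tracked_beams, tracked_beams[:reported]


# Hypothetical BRSRP measurements for ten beams (values in dBm).
example = {0: -95.0, 1: -88.5, 2: -101.2, 3: -79.4, 4: -84.0,
           5: -110.3, 6: -91.7, 7: -86.1, 8: -99.9, 9: -82.6}
tracked_set, reported_set = track_beams(example)
print("Tracked beams:", tracked_set)
print("Reported beams:", reported_set)
```

A scanner that ranks beams by a different metric can reuse the same structure by swapping the measured quantity.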

Figure 6 Using the R&S system in the field.

Due to the aggressive timeline for early 5G adopters, Rohde & Schwarz has designed a prototype measurement system that uses an ultra-compact drive test scanner covering the frequency bands up to 6 GHz. This frequency range is extended by using a down-conversion approach: down-converting up to eight 100 MHz wide component carriers transmitted at 28 GHz into an intermediate frequency range that can be processed by the drive test scanner. The entire solution is integrated into a battery-operated backpack, enabling coverage measurements in the field, for example, in office buildings. Figure 5 shows the setup and its components, and Figure 6 shows the scanner being used during a walk test in a residential neighborhood.

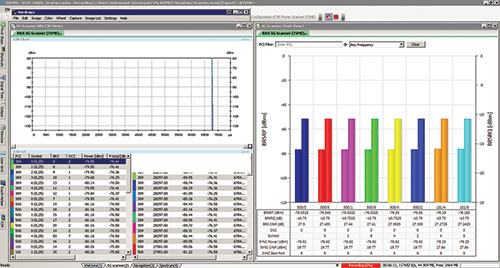

An example of the measurement results is shown in Figure 7. In the screen to the right, the eight strongest beams for all detected carriers (PCI) are plotted, including the discovered beam index. The two values below each bar show the PCI (top) and the beam index (bottom). The beams are ranked by the carrier-to-interference-plus-noise ratio (CINR) measured for the BRS, rather than by BRSRP. At the top of the screen, the user can enter a particular PCI and identify the eight strongest beams for that carrier at the actual measurement position. Also, the scanner determines the OFDM symbol the beam was transmitted in, as well as which OCC was used. Based on the measured BRS CINR, a user can predict the possible throughput at the particular measurement position. Next is the measured synchronization power and CINR for the synchronization signals. In a mobile network, a device would determine from the CINR whether the detected cell is a cell to camp on. That is usually decided against a threshold defined as a minimum CINR of the synchronization signals. This threshold is −6 dB for LTE and, for pre-5G, is being evaluated during the ongoing field trials. In Verizon’s 5GTF standard, the synchronization signals are transmitted over 14 antenna ports that ultimately point these signals in certain directions. Therefore, the application measures and displays synchronization signal power, CINR and, in addition, the identified antenna port.

Figure 7 Example 5GTF coverage measurements.

SUMMARY

As discussed throughout this article, the business case for using mmWave frequencies in an FWA application scenario stands or falls depending on whether the link budget can be fulfilled at an affordable ISD. When deploying 5G FWA, network equipment manufacturers and service providers will require optimization tools to determine the actual coverage before embarking on network optimization.

References

1. www.rohde-schwarz.com/us/news-press/press-room/press-releases-detailpages/rohde-schwarz-and-huawei-kirin-970-demonstrate-1.2-gbps-press_releases_detailpage_229356-478146.html

2. www.qualcomm.com/documents/5g-consumer-survey-key-motivations-and-use-cases-2019-and-beyond

3. www.businesswire.com/news/home/20160401005508/en/Frontier-Communications-Completes-Acquisition-Verizon-Wireline-Operations

4. www.wirelessweek.com/news/2016/07/fcc-unanimously-opens-nearly-11-ghz-spectrum-5g

5. www.fiercewireless.com/wireless/verizon-confirms-xo-spectrum-28-ghz-and-39-ghz-bands-will-be-used-5g-tests

6. 5gtf.org/

7. Propagation Path Loss Models for 5G Urban Micro and Macro-Cellular Scenarios, May 2016, arxiv.org/pdf/1511.07311.pdf

8. www.3gpp.org/ftp/TSG_RAN/WG4_Radio/TSGR4_84Bis/Docs/R4-1711558.zip

9. apps.fcc.gov/edocs_public/attachmatch/DOC-340310A1.pdf