What is 5G and Why is it Needed?

Since the cell phone was first introduced many years ago, cellular infrastructure has undergone many transformations. The first generation cellular networks were based on “analog” technology such as Advanced Mobile Phone Service (AMPS). The second generation (2G) systems featured digital technology utilizing standards such as Global System for Mobile Communications (GSM). In terms of capability, 2G added basic SMS (texting) to voice with limited wireless data capability. Web browsing on a 2G mobile device was limited. Wireless data was driven by texting, email and static photo transfers.

3G, or third generation networks, added a higher speed data capability where limited video could be transferred using Wideband Code Division Multiple Access (W-CDMA). Later evolutions of 3G included HSPA and HSPA+ (the equivalent of 3.5G) and delivered an enhanced user experience. However, big data applications such as streaming video were slow compared to WiFi or Wireless LAN speeds, which most consumers used as a comparison benchmark.

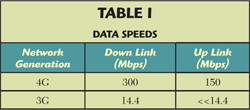

Today, network service providers are rolling out fourth generation (4G) networks based on Long Term Evolution (LTE). LTE offers significant upgrades over 3G in terms of data throughput with up to five to six times faster peak rates (see Table 1). Most service providers plan to transition to LTE-Advanced, or 4.5G, which is expected to double the available bandwidth from LTE. With LTE and LTE-Advanced, wireless data consumers now have a communication technology that rivals current WiFi in terms of user experience.

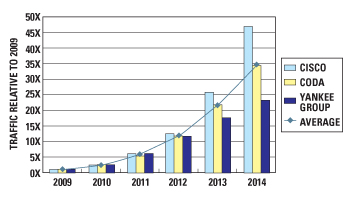

On the surface, future wireless data capabilities along the LTE trajectory appear to approach parity with WiFi from a user experience perspective, ostensibly reducing or mitigating the need for quantum leaps in increased bandwidth. However, with the rapid adoption of smart devices such as smartphones, tablets and even phablets, network capacity and bandwidth are being consumed at accelerated rates. In fact, industry analysts predict that wireless data demand will exceed 2009 levels by over 35× in 2014 (see Figure 1), and this growth rate is not expected to subside any time soon. Capacity is, in effect, a function of bandwidth. More bits transmitted faster free up spectrum for other users and their data demands. Doubling the data rate effectively increases the capacity by 2×. Therefore, the primary motivation for investments in 5G research is to increase network capacity via increased bandwidth and to avoid a capacity shortage.

Figure 1 Industry forecasts of mobile data traffic (From Mobile Broadband: The Benefits of Additional Spectrum, FCC Report 10/2010).


Are LTE and LTE-Advanced Not Sufficient to Address Consumer Demand?

Considering the rate at which wireless users are consuming data, there is genuine concern across the industry that network capacity may become constrained in the not too distant future without significant technology upgrades. Take, for example, the currently quoted LTE rates of 300 Mbps in the downlink and 150 Mbps in the uplink. These rates are about four to five times faster than 3G and 3.5G technologies. LTE-Advanced may further double to quadruple data rates. So, in a span of 10 to 15 years, the world’s cellular operators increased capacity by up to about 20×. In that same time frame, “demand” increased by more than 100×. It’s clear that LTE-Advanced is necessary and that a new fifth generation network is critical. Wireless infrastructure companies and other members of the 3GPP standardization body, in fact, have set out a challenge to increase capacity by “1000× by 2020” (www.cvt-dallas.org/MBB-Nov11.pdf).
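The arithmetic behind that comparison can be checked with a short sketch. The multipliers are the ones quoted in the paragraph above; the variable names are illustrative, and 100× is treated as a lower bound on demand growth because the text says "more than 100×".

```python
# Multipliers quoted in the text; variable names are illustrative only.
lte_gain = (4, 5)       # LTE vs. 3G/3.5G: "four to five times faster"
lte_adv_gain = (2, 4)   # LTE-Advanced vs. LTE: "double to quadruple"
demand_growth = 100     # demand grew by "more than 100x" (lower bound)

# Combined gain is the product of the two generational improvements.
low = lte_gain[0] * lte_adv_gain[0]
high = lte_gain[1] * lte_adv_gain[1]

# Even in the best case, demand outruns capacity by this factor or more.
shortfall = demand_growth / high

print(f"Combined capacity gain: {low}x to {high}x")
print(f"Demand exceeds the best-case gain by at least {shortfall:.0f}x")
```

Under these figures the 20× quoted for capacity is the optimistic end of the range, which is why the gap to demand is, if anything, understated.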

How Will 5G Address the “Bandwidth/Capacity” Crunch?

First of all there is much discussion regarding 5G – what it will be or what it will not be. We do know that 5G will have to be much faster than today’s 4G networks and the eventual LTE-Advanced (sometimes referred to as 4.5G). The real question is how we achieve faster performance and high capacity with the current infrastructure including existing equipment, available spectrum and so on. The 3GPP standardization body is establishing an investigative group to explore the next generation wireless question, which will hopefully be kicked off early next year. The consensus is that there is no “silver bullet” or one technology that will lead to the necessary bandwidth expansion, but a combination of advancements such as heterogeneous networks encompassing small cells and coordinated multipoint, reallocation of spectrum, and other advanced techniques such as self-organizing networks (SON).

What Technologies are Being Investigated to Support a Potential 5G Standard?

Several technologies, such as heterogeneous networks, small cells, relays and coordinated multipoint, are being researched today to increase spectrum efficiency and lower intercell interference. Essentially, the motivation behind these research vectors is to lower the load per base station by increasing the density, which in turn increases spectrum efficiency to users in a smaller geographic area. All of these options focus on deploying more infrastructure equipment and further increasing utilization by employing “smart” techniques (e.g., coordinated multipoint and beamforming). Fundamentally, by sharing network information at the base station level, load and coverage per user can be optimized to more effectively use the existing spectrum.

A more difficult challenge is the availability of spectrum. The transition from 3G to 4G introduced new technologies for increased data throughput and reliability, but what is often overlooked is that new spectrum was introduced in conjunction with the LTE rollouts. For example, in the United States, the 700 MHz spectrum was auctioned specifically as a vehicle to deploy LTE.

A similar scenario played out with W-CDMA and the 3G rollout, at a time when 2G networks were pervasive and successful. 3GPP offered new coding and modulation techniques, but these new technologies were largely (if not exclusively) deployed on new spectrum earmarked for those deployments.

With 5G, the answer is not so simple. Unless industry, government and associated spectrum regulating entities can agree on how and when to reallocate spectrum, there is essentially no spectrum available below 6 GHz. Reallocating spectrum is not an easy task since many service operators paid billions of dollars to acquire the spectrum already in use, and transitions are not easy or cheap.

Of particular note is the research that Dr. Ted Rappaport is doing at NYU Wireless. Dr. Rappaport has been characterizing the spectrum at 28, 38 and 60 GHz plus E-Band that covers frequencies from 71 to 76 GHz in New York City – which is a very challenging environment. These measurements show that wireless outdoor communication is possible at those frequencies although significant investment is required to make communication at these frequencies feasible.

Is 28 GHz to mmWave E-Band a Challenging Design Task?

None of the options proposed to address the wireless data crunch will be simple or easy. The industry has to challenge conventional thought, which includes the design process. mmWave frequencies in particular were widely considered not suitable for cellular data and a network based on this spectrum unfeasible. Dr. Rappaport’s work has essentially challenged this thinking. He has proven that reliable transmission and reception at these frequencies is possible but there is much work to do. Essentially, all the paradigms associated with communication below 6 GHz must change, creating research opportunities in RF front end design and antennas, beamforming, physical layer design and even new protocols.

What’s encouraging is that while many of these technologies are new and have yet to be developed, there is a history of rolling out new data capabilities that overlay the existing infrastructure. Even if you consider all of the research below 6 GHz in terms of physical layer, small cells, and RF front ends (MIMO), the network is still limited by Shannon’s theorem: the capacity of a communication channel is bounded by its bandwidth and its signal-to-noise ratio. Heterogeneous networks will improve capacity, but it is not clear that this alone will achieve 1000× capacity by 2020. If there is no available bandwidth, then new spectrum must be found somewhere.
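To make the Shannon limit concrete, the sketch below evaluates the standard capacity formula C = B · log2(1 + SNR) for a single channel. The specific numbers are assumptions chosen for illustration, not figures from this article: a 20 MHz carrier (the widest single LTE carrier), a hypothetical 1 GHz mmWave channel, and signal-to-noise ratios of 20 dB and 30 dB.

```python
import math

def shannon_capacity_bps(bandwidth_hz, snr_db):
    """Upper bound on error-free bit rate for one AWGN channel."""
    snr_linear = 10 ** (snr_db / 10)          # convert dB to a power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)

# Illustrative values (assumptions, not measurements).
c_20mhz = shannon_capacity_bps(20e6, 20.0)         # 20 MHz carrier at 20 dB
c_20mhz_hi_snr = shannon_capacity_bps(20e6, 30.0)  # same carrier, 10x the SNR
c_1ghz = shannon_capacity_bps(1e9, 20.0)           # hypothetical 1 GHz channel

for label, c in [("20 MHz @ 20 dB", c_20mhz),
                 ("20 MHz @ 30 dB", c_20mhz_hi_snr),
                 ("1 GHz  @ 20 dB", c_1ghz)]:
    print(f"{label}: {c / 1e6:.1f} Mbps")
```

Capacity grows linearly with bandwidth but only logarithmically with signal-to-noise ratio, so a tenfold improvement in SNR yields far less than a tenfold increase in bandwidth. That asymmetry is the reason the search for new spectrum matters.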

You Mentioned a New Design Approach. Can you Elaborate on this Point?

A typical “design” approach has been to come up with an idea, simulate it and then prototype. Usually there are several iterations in the design and simulation stage before prototyping because of the large expense required to develop a working prototype. If there is a fundamental problem in the theory, then it is back to the beginning to start over again. Therefore heavy simulations are typically required before a prototype is even planned. With conventional methods, transitioning from concept to simulation to prototype takes a very long time and consumes many resources. In other words, it is very expensive. The most important goal of the design process is to deliver a working prototype sooner rather than later so that the real world conditions and system issues can be accounted for early in the design.

Most of today’s simulations use additive white Gaussian noise (AWGN) to model the channel. As network operators will tell you, this is simply not a realistic scenario; it is perhaps a good start, but far from realistic. With the new technologies being investigated for 5G, conventional channel models are not good proxies for the real world. System engineers and network designers must also consider the processing requirements and the feasibility of actually deploying a new algorithm/protocol on a platform that is both cost effective and low power (to conserve battery life). Getting to a prototype sooner rather than later is very important.
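For readers unfamiliar with what an AWGN-only simulation looks like, here is a minimal sketch using only the Python standard library. It is an assumption-laden toy, not a model used by any group named in this article: it sends BPSK symbols through a channel that does nothing but add Gaussian noise, then compares the measured bit error rate with the textbook closed-form value. The function names and the 4 dB operating point are illustrative.

```python
import math
import random

def bpsk_ber_awgn(ebn0_db, n_bits=100_000, seed=0):
    """Monte Carlo estimate of BPSK bit error rate over an AWGN channel."""
    rng = random.Random(seed)
    ebn0 = 10 ** (ebn0_db / 10)
    # Unit-energy symbols: noise variance is N0/2 = 1 / (2 * Eb/N0).
    sigma = math.sqrt(1 / (2 * ebn0))
    errors = 0
    for _ in range(n_bits):
        bit = rng.getrandbits(1)
        symbol = 1.0 if bit else -1.0
        received = symbol + rng.gauss(0.0, sigma)  # the entire "channel"
        decided = 1 if received > 0 else 0
        errors += decided != bit
    return errors / n_bits

def bpsk_ber_theory(ebn0_db):
    """Closed-form BPSK error rate in AWGN: 0.5 * erfc(sqrt(Eb/N0))."""
    ebn0 = 10 ** (ebn0_db / 10)
    return 0.5 * math.erfc(math.sqrt(ebn0))

sim = bpsk_ber_awgn(4.0)
theory = bpsk_ber_theory(4.0)
print(f"simulated BER: {sim:.4f}, theoretical BER: {theory:.4f}")
```

The single line that adds noise is the whole channel model. Multipath, fading, blockage, interference from neighboring cells and hardware impairments are all absent, which is the gap between simulation and deployment that the paragraph above describes.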

Where Does National Instruments Fit Into this 5G Revolution?

National Instruments has been working with wireless researchers for a number of years through the RF/Communications Lead User program. Through this program, NI has been working directly with top researchers, such as Dr. Rappaport at NYU Wireless and also Dr. Gerhard Fettweis at TU Dresden, to explore a new approach to communications system design.

We already discussed Dr. Rappaport’s work on mmWave, but also of significance is Dr. Fettweis’ work on new physical layers for 5G. He is already prototyping a new physical layer called GFDM, or Generalized Frequency Division Multiplexing, that addresses some of the shortcomings of OFDM – the standard in today’s 4G communications. Through this work, Dr. Fettweis has gone from simulation to prototype in a matter of months.

The Lead User program was the idea of National Instruments’ CEO and founder, Dr. James Truchard. Dr. Truchard believes that a new design paradigm is needed for research not just in wireless but across many different areas. The tools used in research to transition from design to simulation to prototype have not really evolved over the last 20 years like so many technologies that have improved our everyday life. In particular, National Instruments focuses on a graphical system design approach to accelerate the process from design to simulation to prototype. The combination of this approach with tight hardware and software integration enables researchers to focus on their area of expertise rather than having to struggle with disparate tools and technologies that can take months and years to integrate into a working prototype.

Through the RF/Communications Lead User program, we are also working with leading researchers at commercial companies but I am not at liberty to disclose these relationships as they are confidential. However, I can say that you should stay tuned for some exciting demonstrations using NI tools and technologies on the 5G front.

James Kimery is the director of marketing for RF, communications, and software defined radio (SDR) initiatives at National Instruments. In this role, Kimery is responsible for the company’s communication system design and SDR strategies. He also manages NI’s advanced research RF/Communications Lead User program. Prior to joining NI, Kimery was the director of marketing for Silicon Laboratories’ wireless division, which later became a subsidiary of ST-Ericsson. With Kimery as director, the wireless division grew revenues from $5 million to over $250 million. The division also produced several industry innovations including the first integrated CMOS RF synthesizer and transceiver for cellular communications, the first digitally controlled crystal oscillator, and the first integrated single-chip phone (AeroFONE). The IEEE voted AeroFONE one of the top 40 innovative ICs ever developed. He also worked at NI before transitioning to Silicon Labs and led many successful programs, including the concept and launch of the PXI platform. Kimery was a founding member of the VXIplug&play Systems Alliance, the Virtual Instrument Software Architecture (VISA) working group, and the PXI Systems Alliance. He has authored over 26 technical papers and articles covering a variety of wireless and test and measurement topics. He earned a master’s degree in business administration from the University of Texas at Austin and a bachelor’s degree in electrical engineering from Texas A&M University.